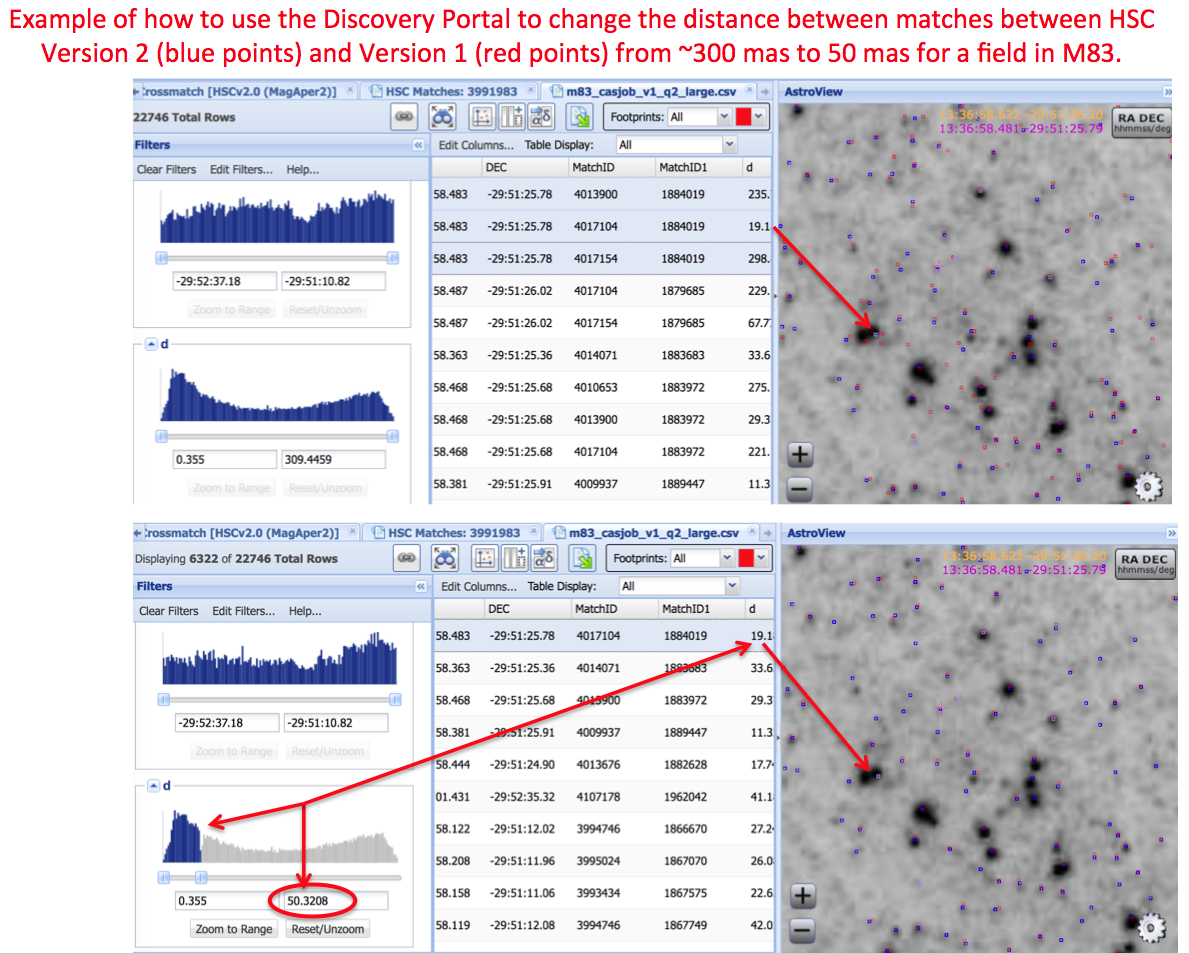

-

What are specific limitations and artifacts that HSC users should be aware of?

NOTE: Unless otherwise noted, the graphics in this section are taken from version 1.

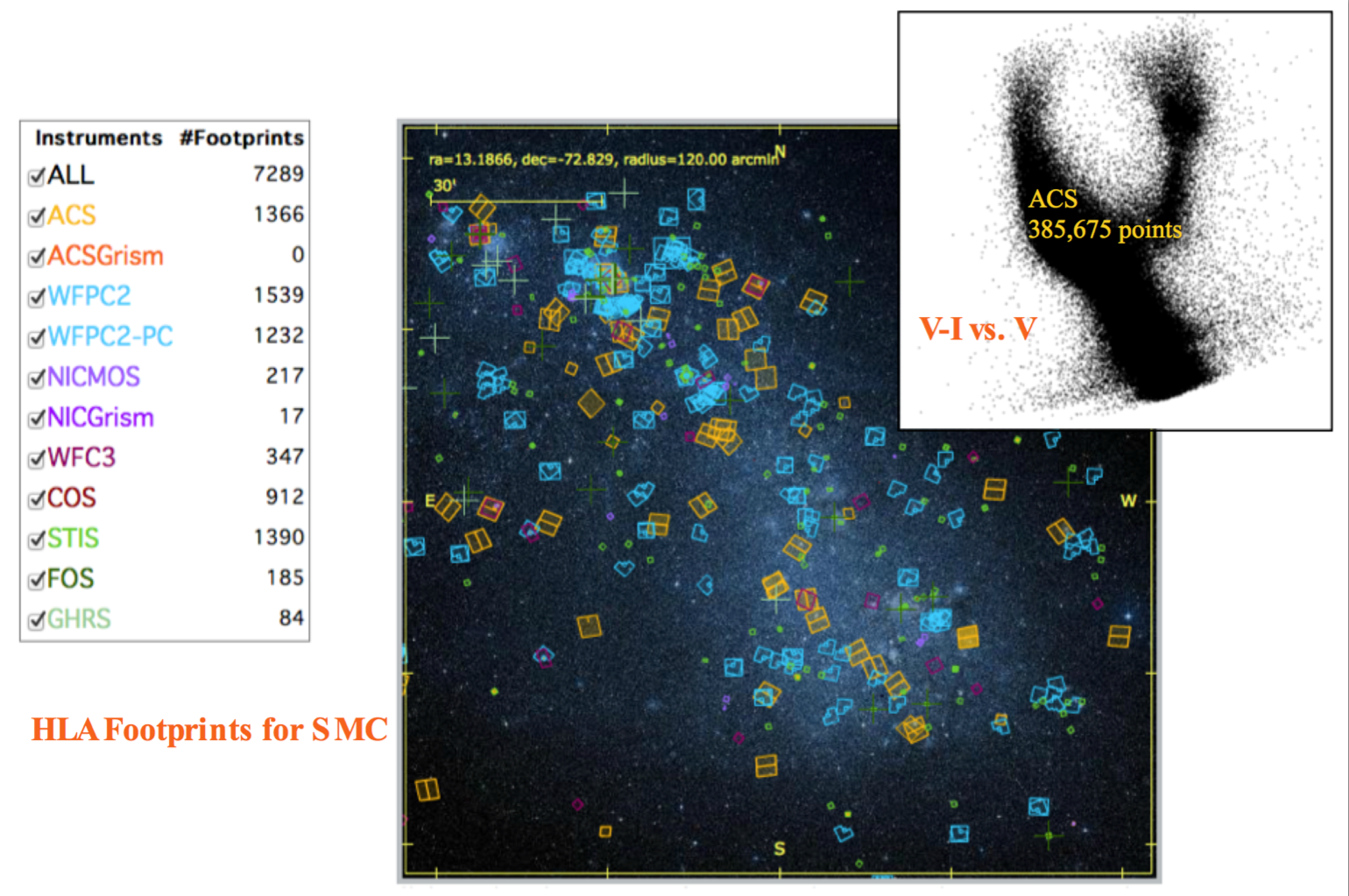

The Hubble Source Catalog is composed of visit-based, general-purpose source lists from the Hubble Legacy Archive (hla.stsci.edu). While the catalog may be sufficient to accomplish the science goals for many projects, in other cases astronomers may need to make their own catalogs to achieve the optimal performance that is possible with the data (e.g., to go deeper). In addition, the Hubble observations are inherently different from large-field surveys such as SDSS, due to the pointed, small field-of-view nature of the observations, and the wide range of instruments, filters, and detectors. Here are some of the primary limitations that users should keep in mind when using the HSC.

LIMITATIONS:

Uniformity:

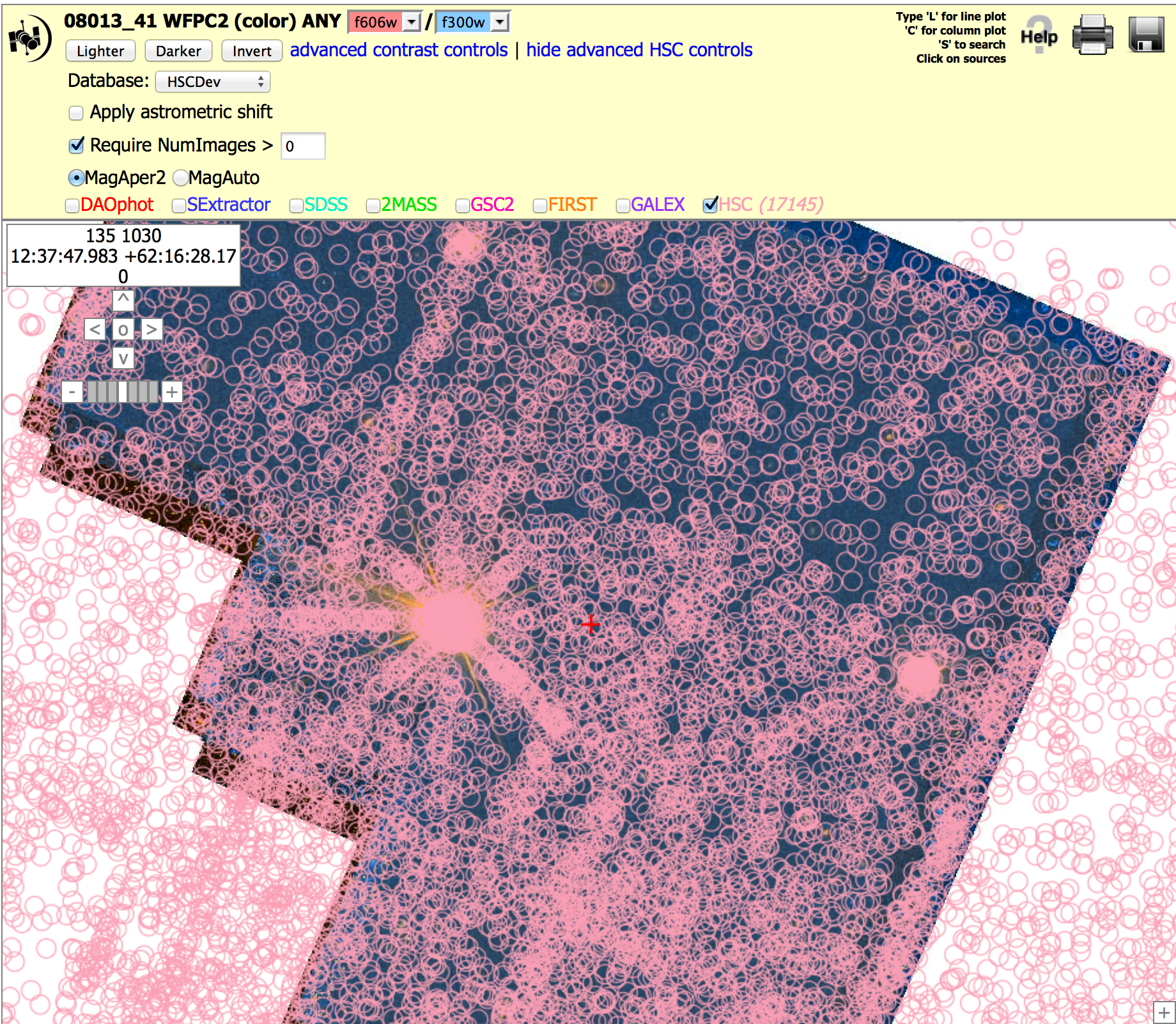

Coverage can be very non-uniform (unlike

surveys like SDSS), since a wide range of HST instruments,

filters, and exposure times have been combined.

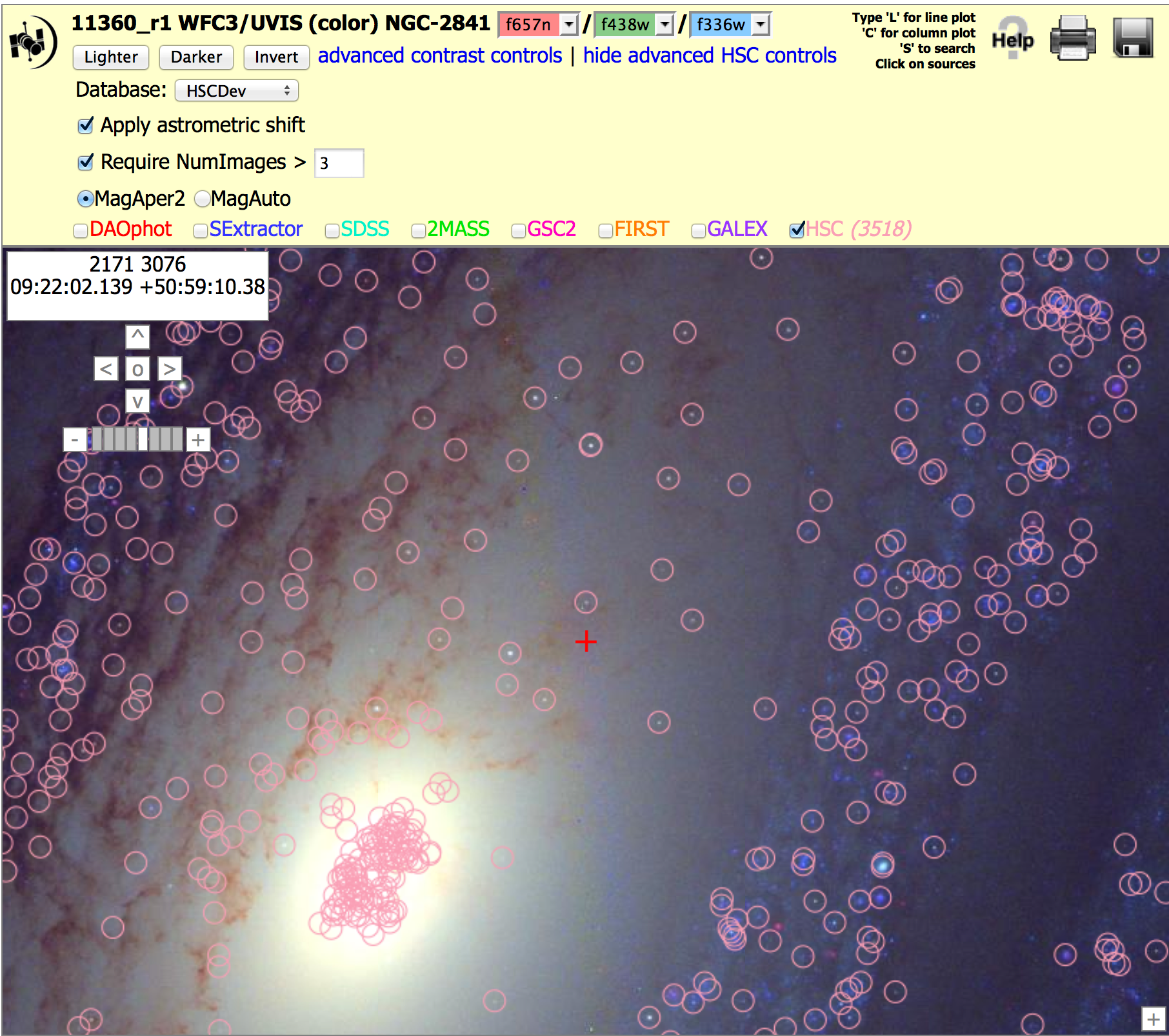

We recommend that users pan out to see the full HSC field when

using the Interactive Display in order

to have a better feel for the uniformity of a particular dataset.

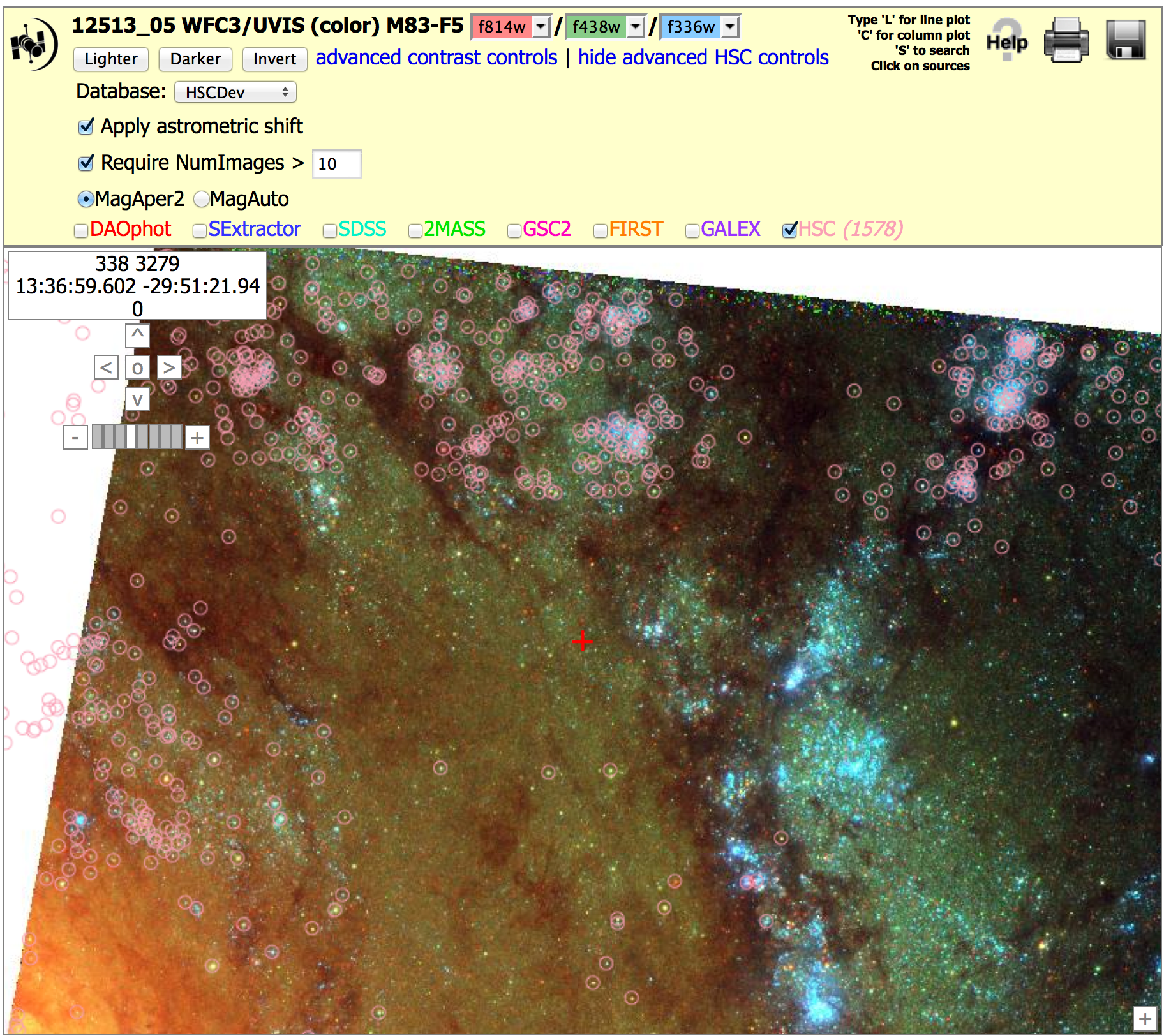

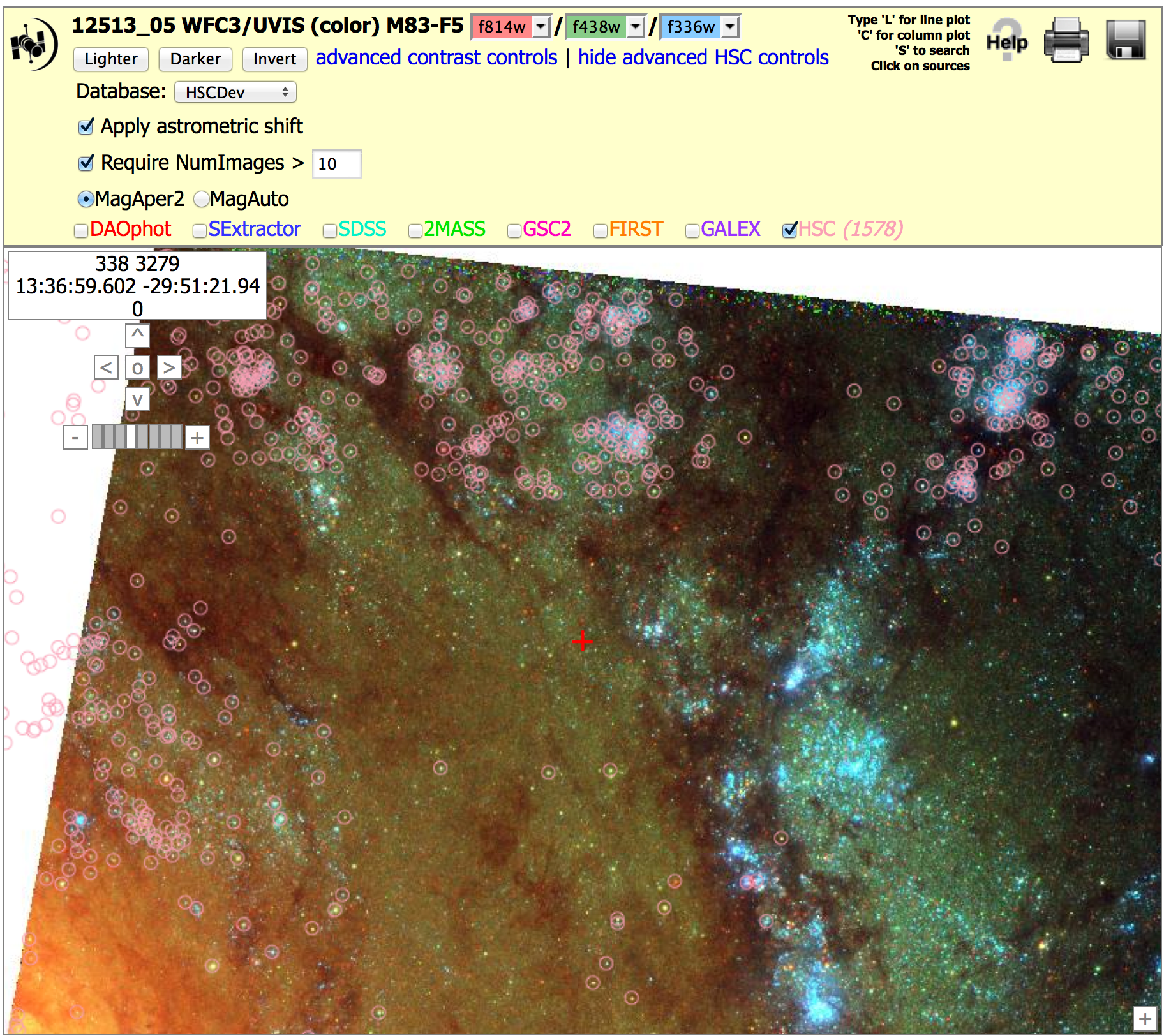

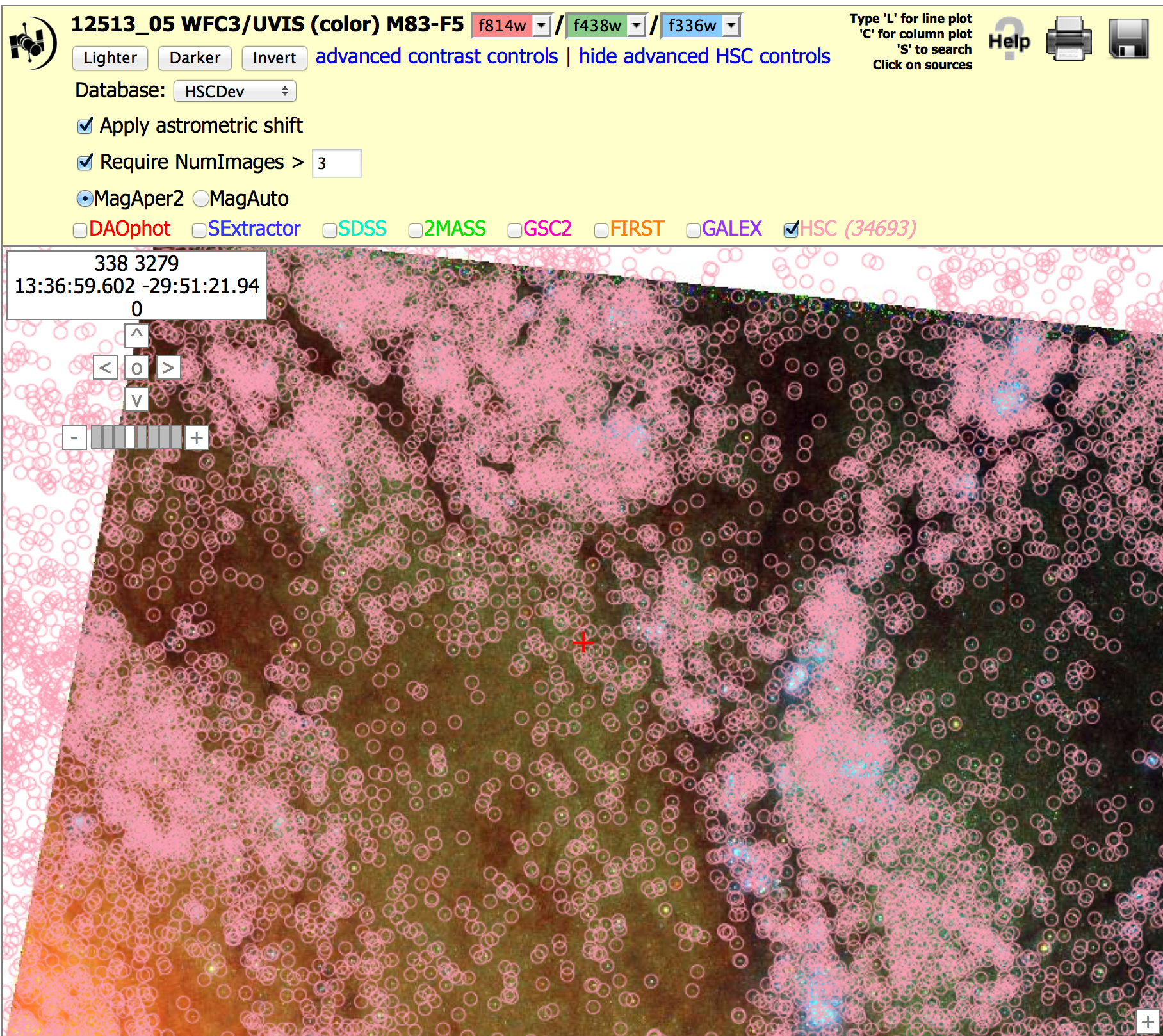

Adjusting

the value of NumImages used for the search can improve the uniformity in many cases.

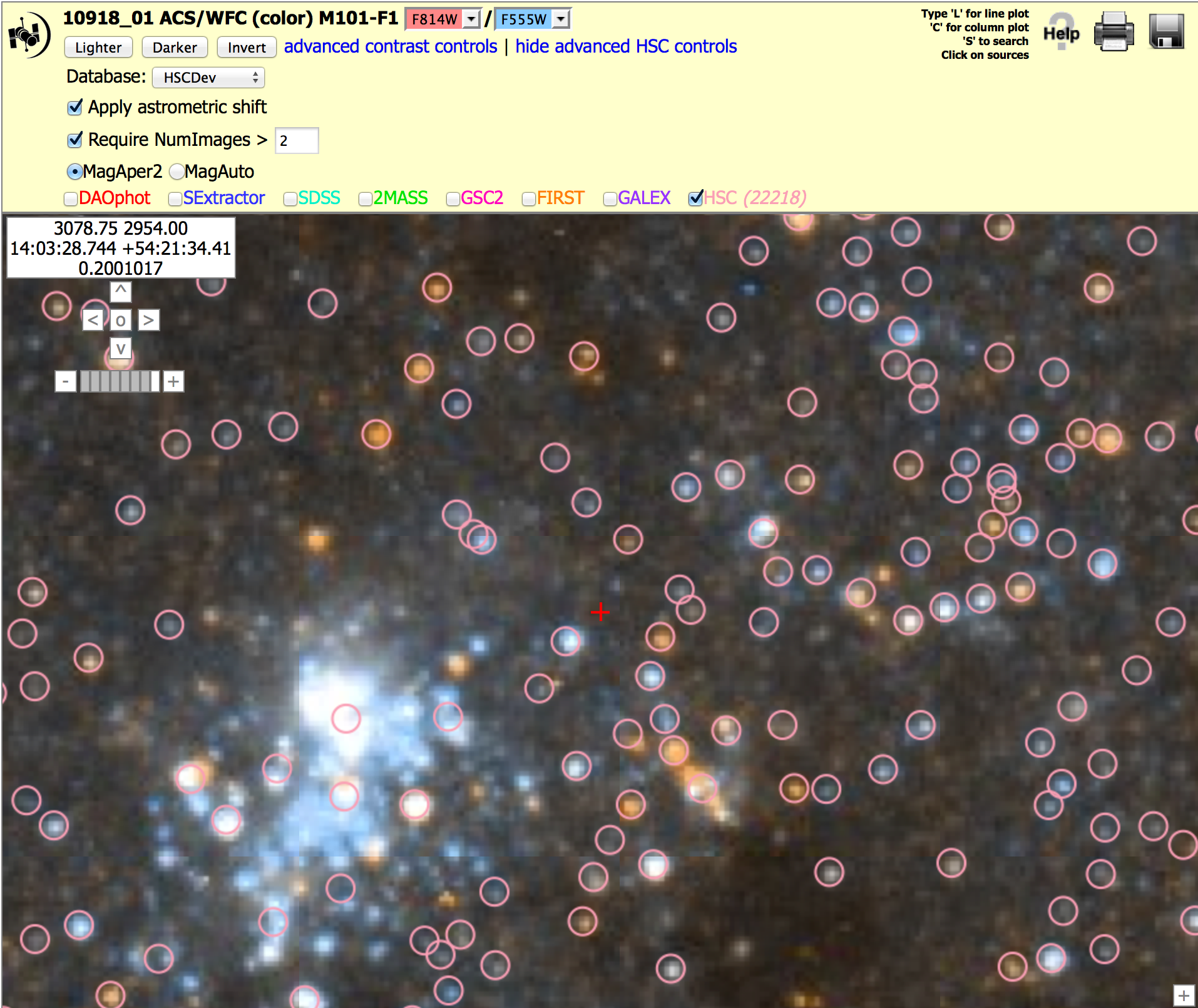

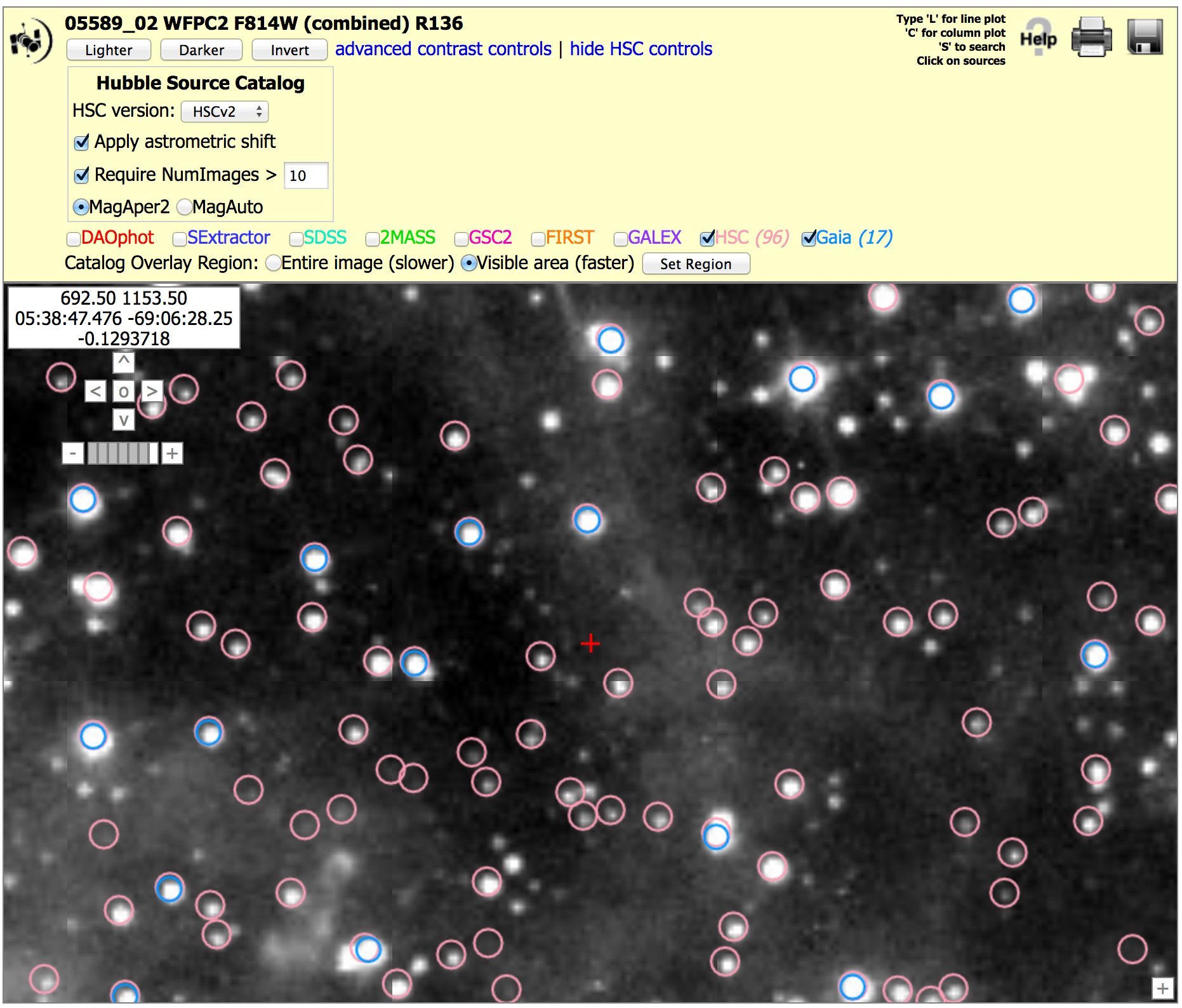

See image below for an example.

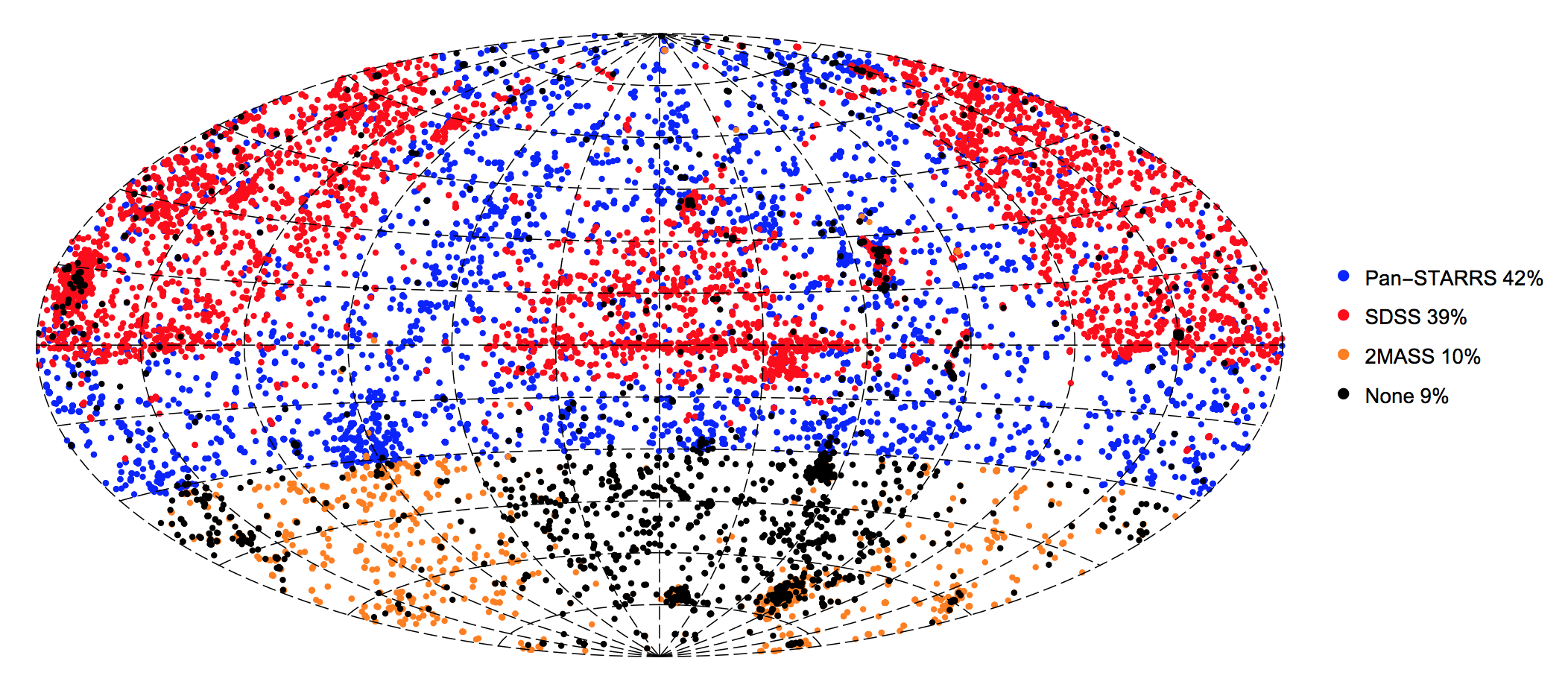

Astrometric Uniformity:

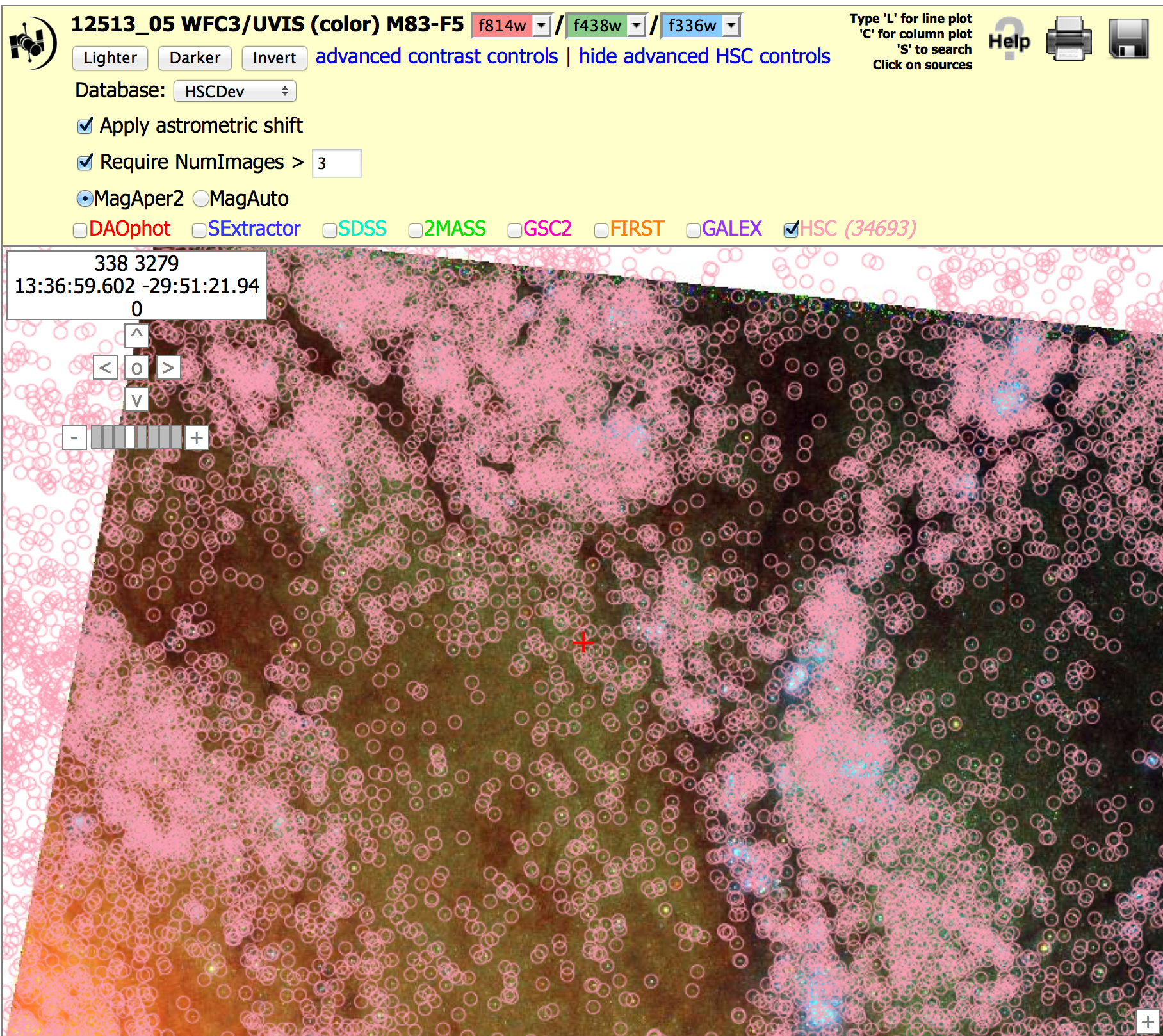

In version 2, about 91% of HSC images have coverage in Pan-STARRS, SDSS, or 2MASS that

permits absolute astrometric corrections of the images

(i.e., AbsCor = Y) to a level of roughly 0.1 arcsec.

See (Whitmore et al. 2016) for more details about astrometry, and to see the corresponding map for version 1. We note that in version 2 we changed the algorithm to use all three datasets, weighted by their goodness of fit. For the figure we show only the dataset with the best-fit value. This tends to weight SDSS more heavily than in version 1.

Depth:

The HSC does not go as deep as it is possible to go. This is due to a number of different reasons, ranging from using an early version of the WFPC2 catalogs (see "Five things you should know about the HSC"), to the use of visit-based source lists rather than a deep mosaic image where a large number of images have been added together.

Completeness:

The current generation of HLA WFPC2 Source Extractor source lists has problems finding sources in regions with high background. The ACS and WFC3 source lists are much better in this regard. The next generation of WFPC2 source lists will use the improved ACS and WFC3 algorithms, and will be incorporated into the HSC in the future.

Visit-based Source Lists:

The use of visit-based, rather than deeper mosaic-based, source lists introduces a number of limitations. In particular, mosaic-based source lists would reach much fainter completeness limits, as discussed in Use Case #1. Another important limitation imposed by this approach is that different source lists are created for each visit, hence a single, unique source list is not used. A more efficient method would be to build a single, very deep mosaic with all existing HST observations, and obtain a source list from this image. Measurements at each of these positions would then be made for all of the separate observations (i.e., "forced photometry"). This approach will be incorporated into the HSC in the future.

ARTIFACTS:

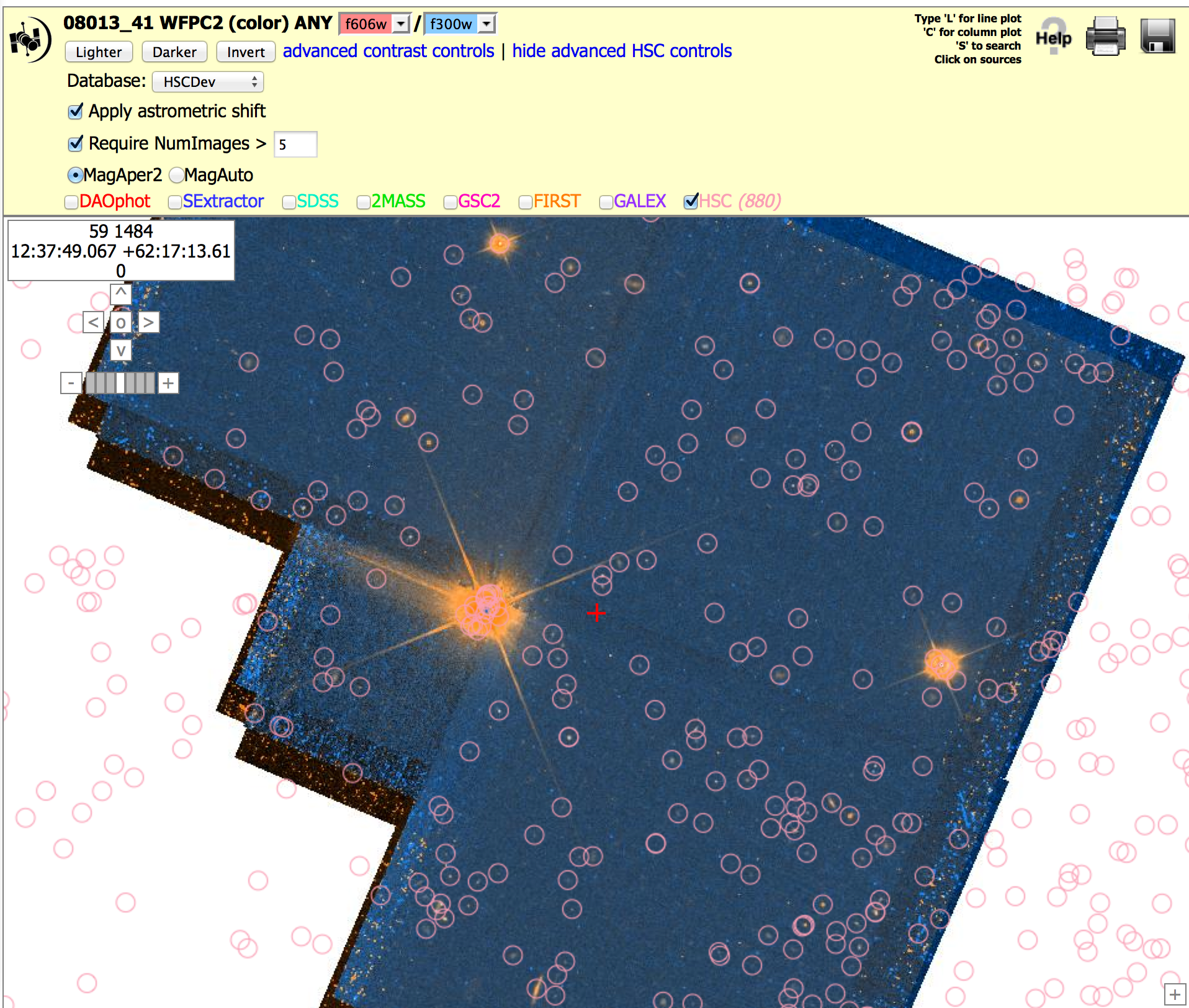

False Detections:

Uncorrected cosmic rays are a common cause of blank sources. Such artifacts can be removed by requiring that the detection be based on more than one image. This constraint can be enforced by requiring NumImages > 1.

Another common cause of "false detections" is the attempt by the detection software to find large, diffuse sources. In some cases this is due to the algorithm being too aggressive when looking for these objects and finding noise. In other cases the objects are real, but not obvious unless observed with the right contrast stretch and field-of-view.

It is not easy to filter out these potential artifacts without losing real objects. One technique users might try is to use a size criterion (e.g., concentration index = CI) to distinguish real vs. false sources.
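The two filters described above can be sketched in a few lines of Python. This is only an illustrative sketch: the `Source` record and its field names are hypothetical stand-ins for the NumImages and CI columns, and the CI range used in the example is an arbitrary placeholder rather than a recommended cut.

```python
from dataclasses import dataclass


@dataclass
class Source:
    """Hypothetical catalog row; field names are illustrative only."""
    match_id: int
    num_images: int  # stands in for the NumImages column
    ci: float        # stands in for the concentration index (CI)


def filter_sources(sources, min_images=2, ci_range=None):
    """Keep sources seen in at least `min_images` images and,
    if `ci_range` is given, with CI inside that (low, high) range."""
    kept = []
    for s in sources:
        if s.num_images < min_images:
            continue  # single-image detections may be cosmic rays
        if ci_range is not None and not (ci_range[0] <= s.ci <= ci_range[1]):
            continue  # size outside the range the user considers plausible
        kept.append(s)
    return kept


catalog = [
    Source(match_id=1, num_images=1, ci=0.3),  # single-image detection
    Source(match_id=2, num_images=4, ci=1.0),  # compact, multi-image
    Source(match_id=3, num_images=3, ci=3.5),  # very diffuse
]

multi_image = filter_sources(catalog)                   # NumImages > 1
compact = filter_sources(catalog, ci_range=(0.8, 1.5))  # placeholder CI cut
```

Any CI cut of this kind should be checked against the images for the field in question, since real extended objects can be removed along with the noise.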

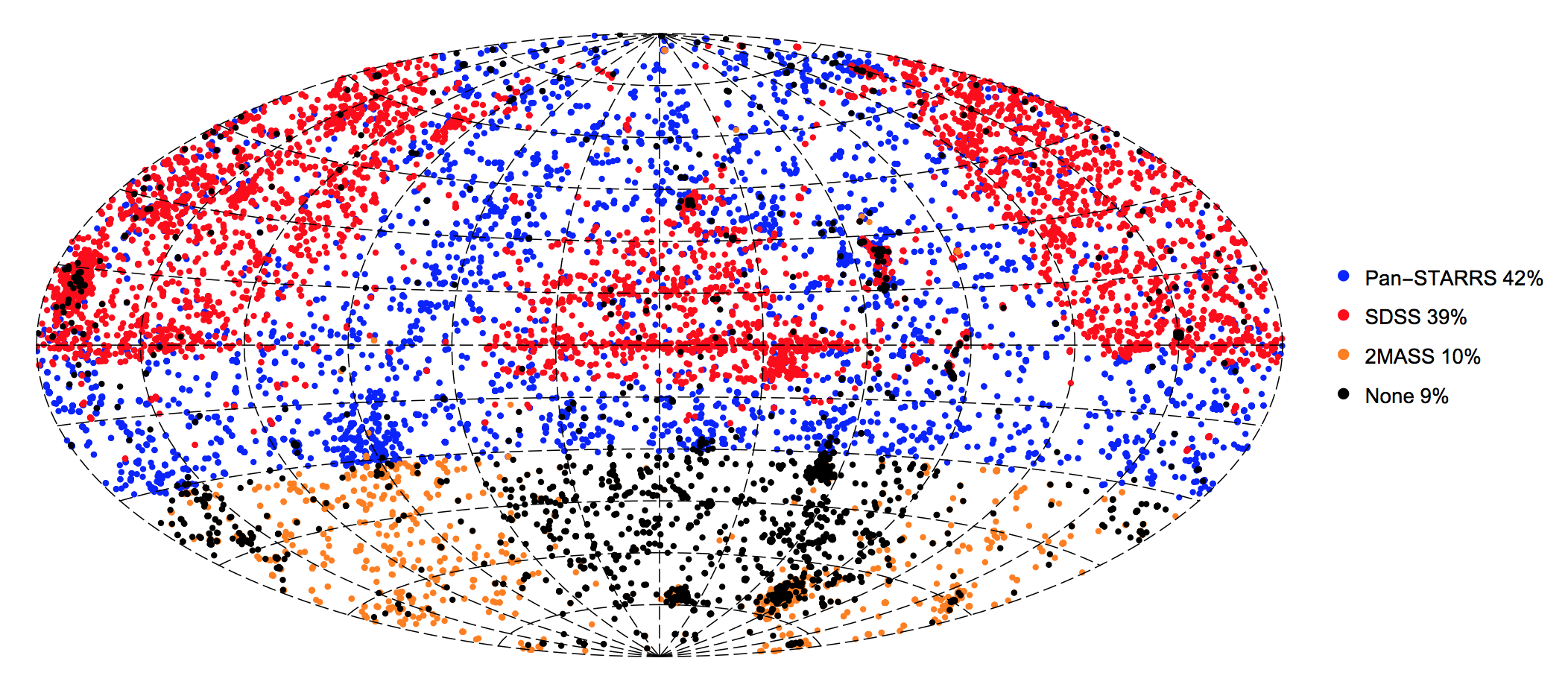

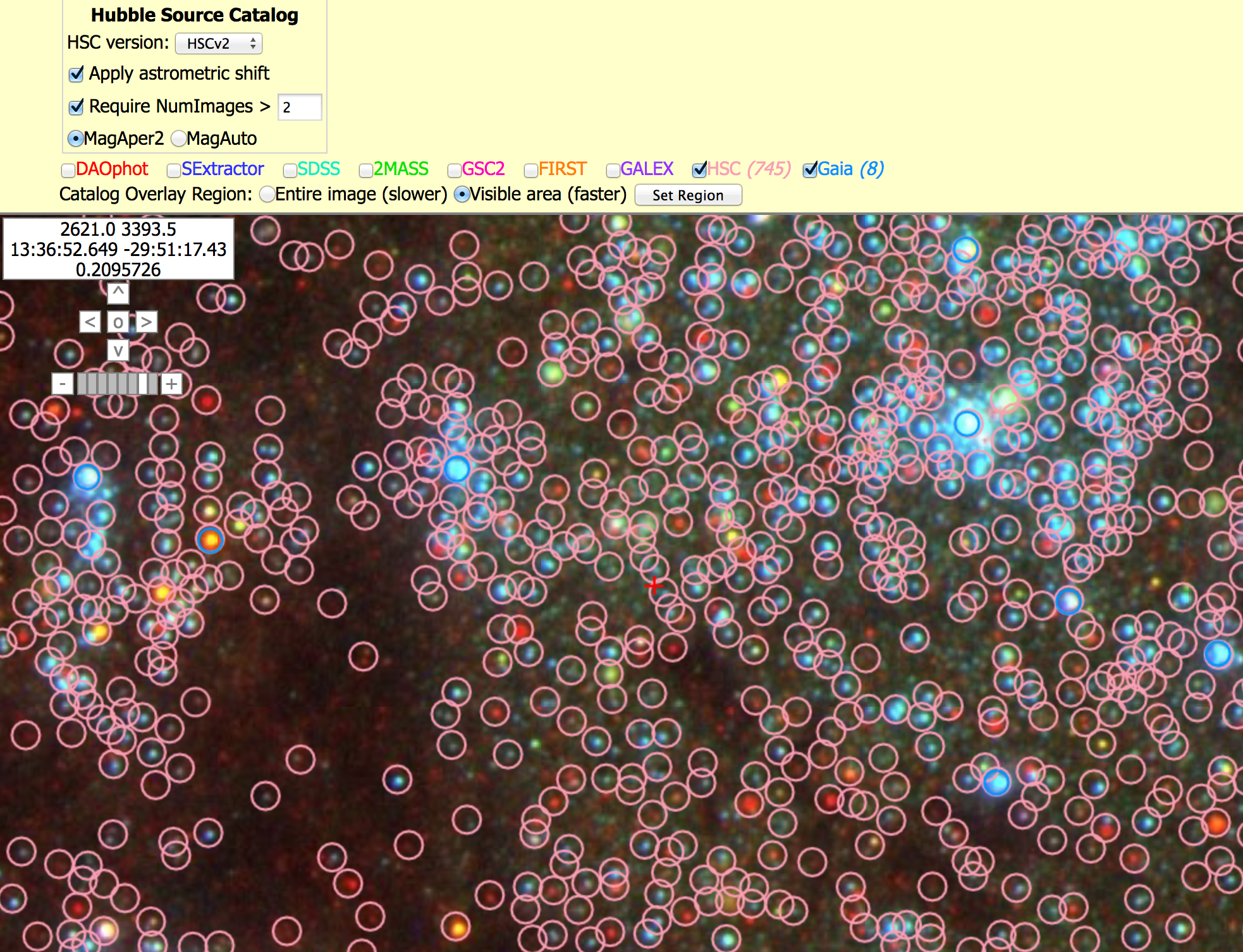

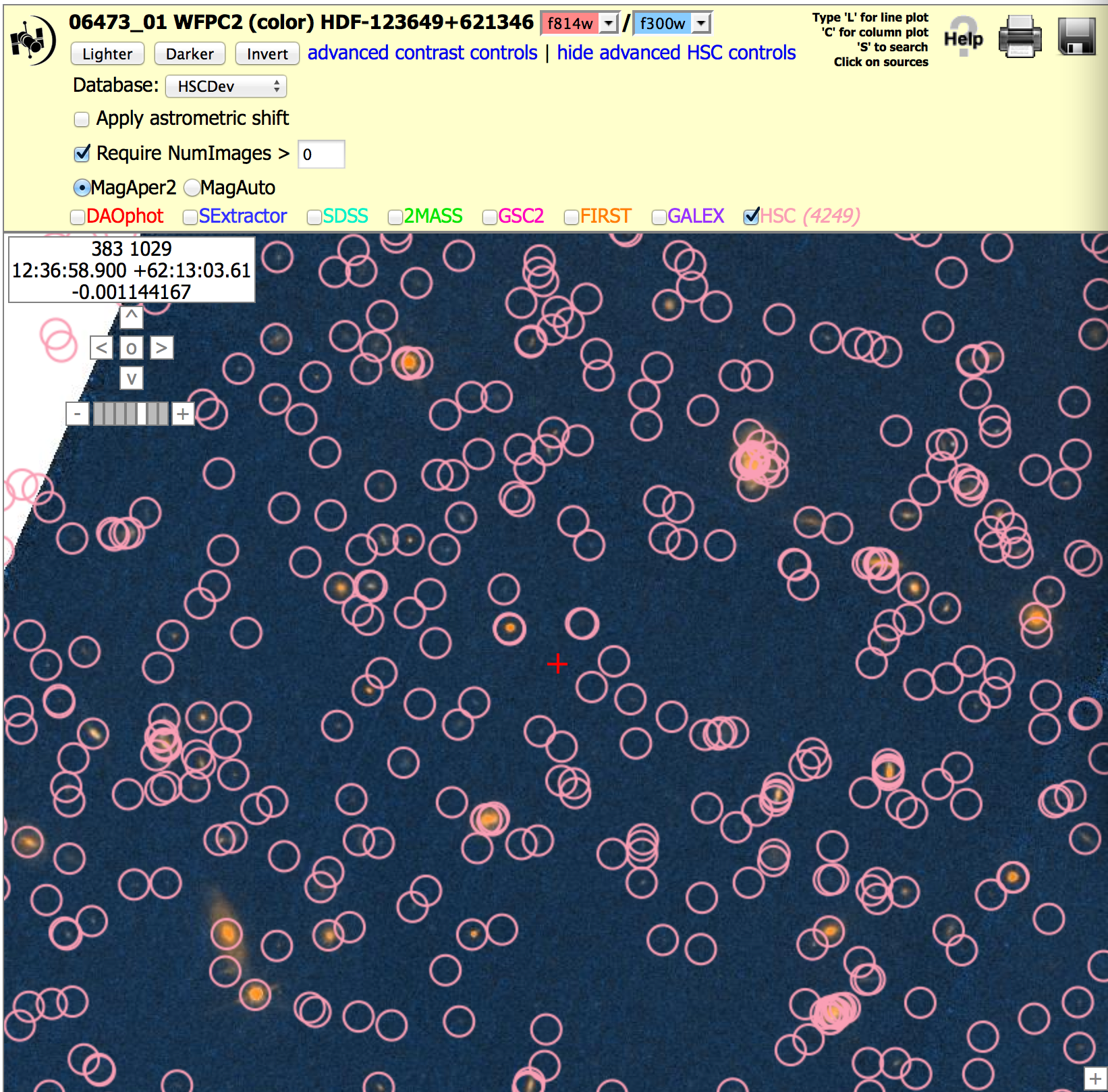

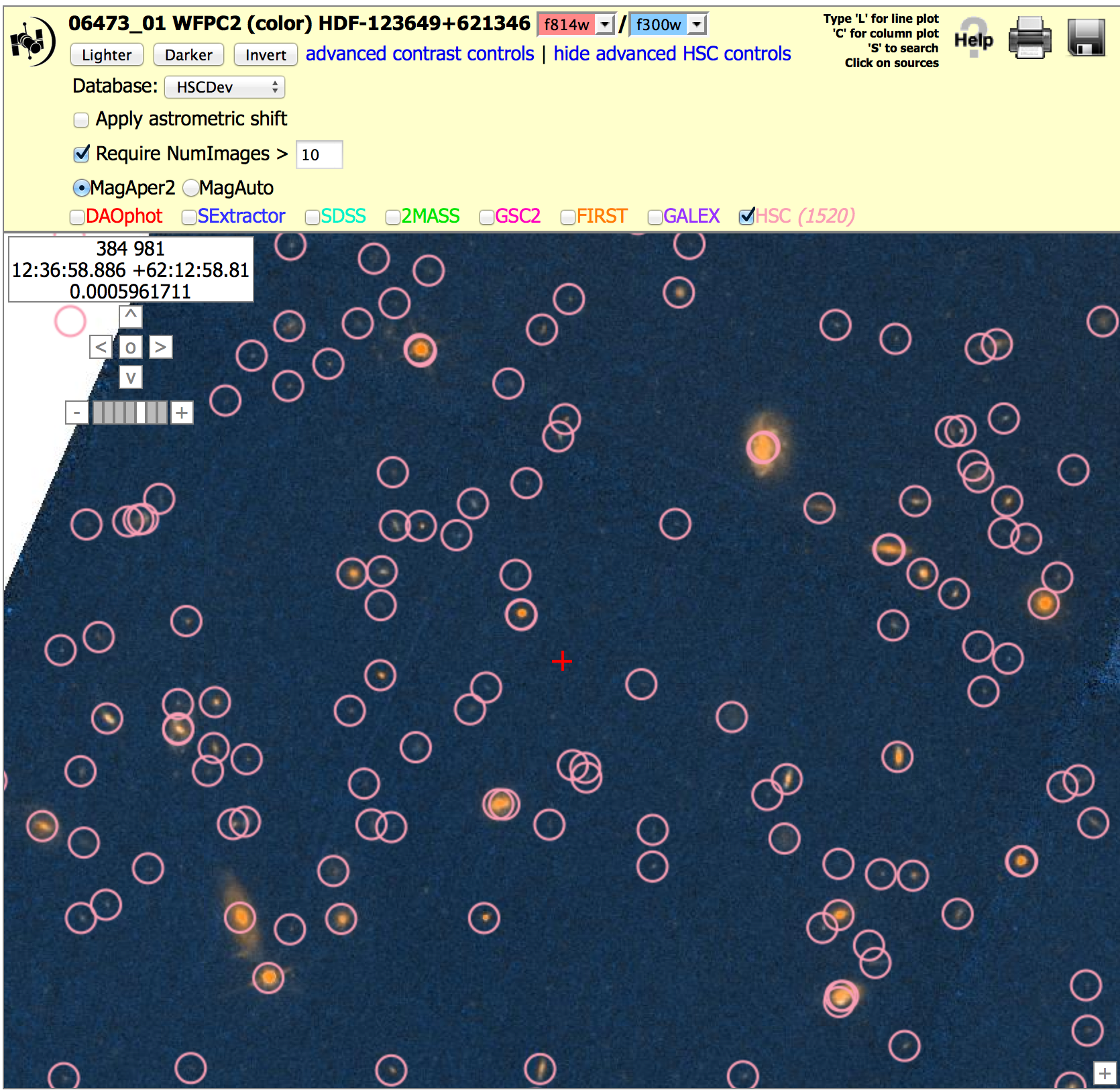

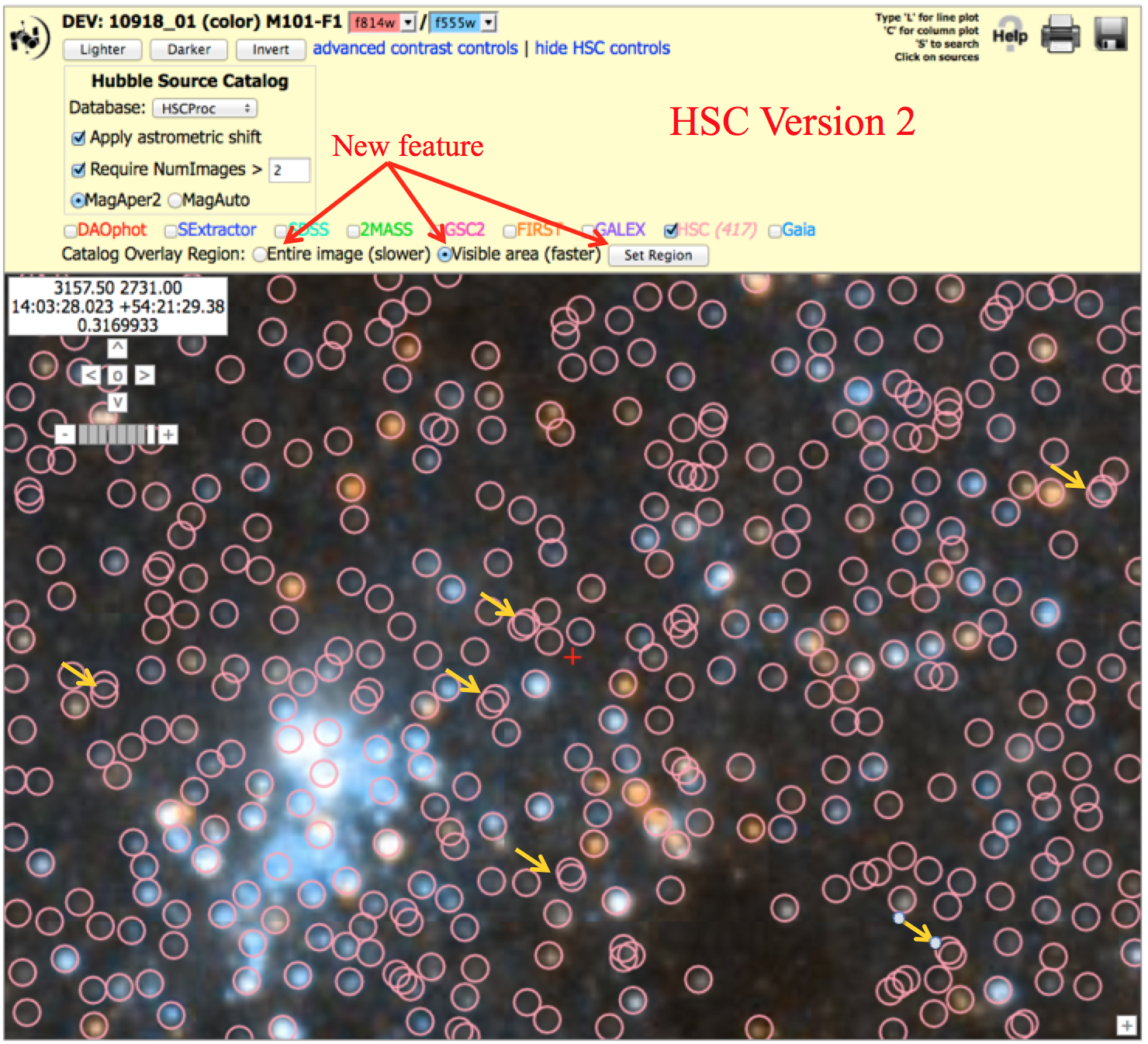

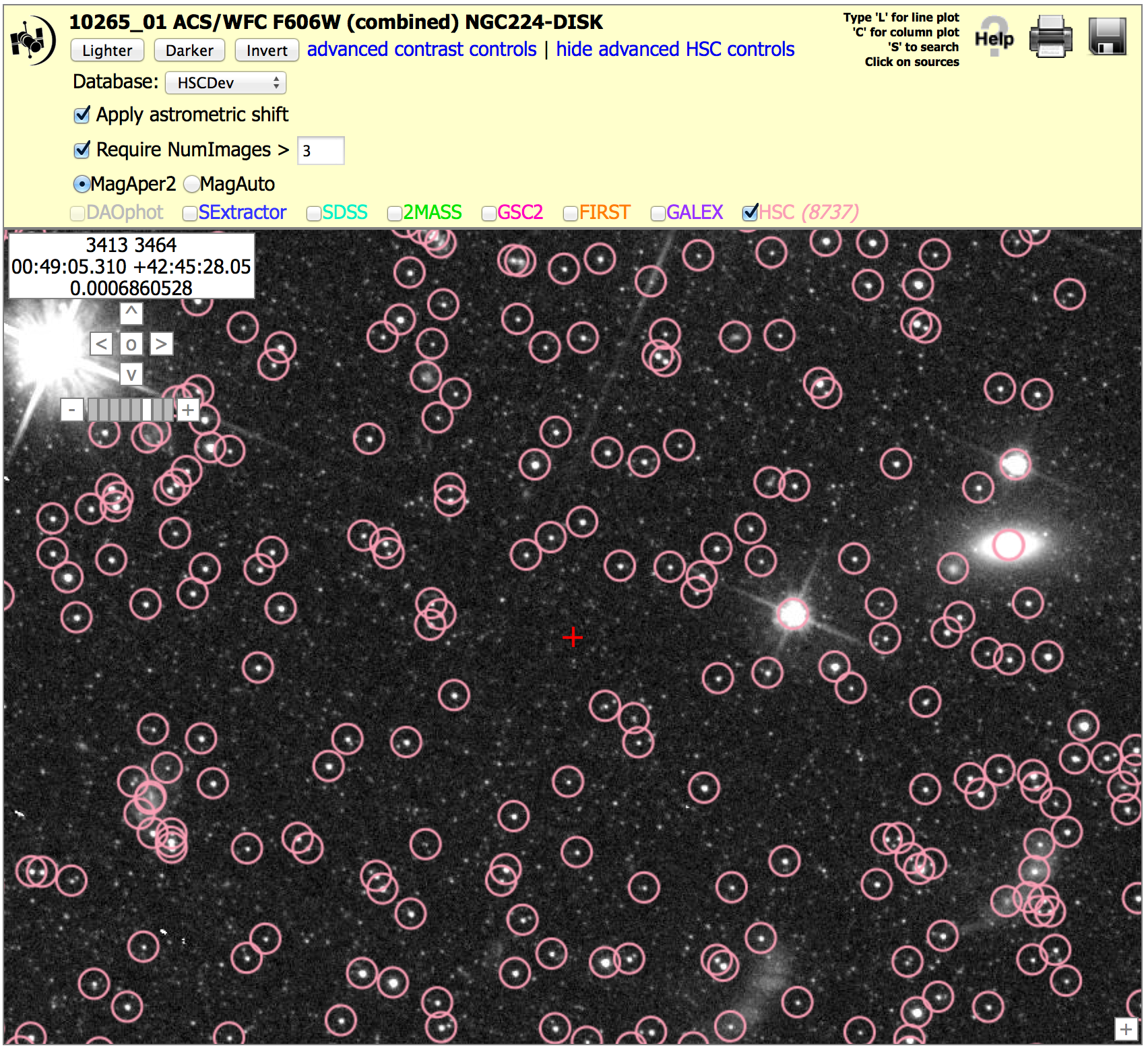

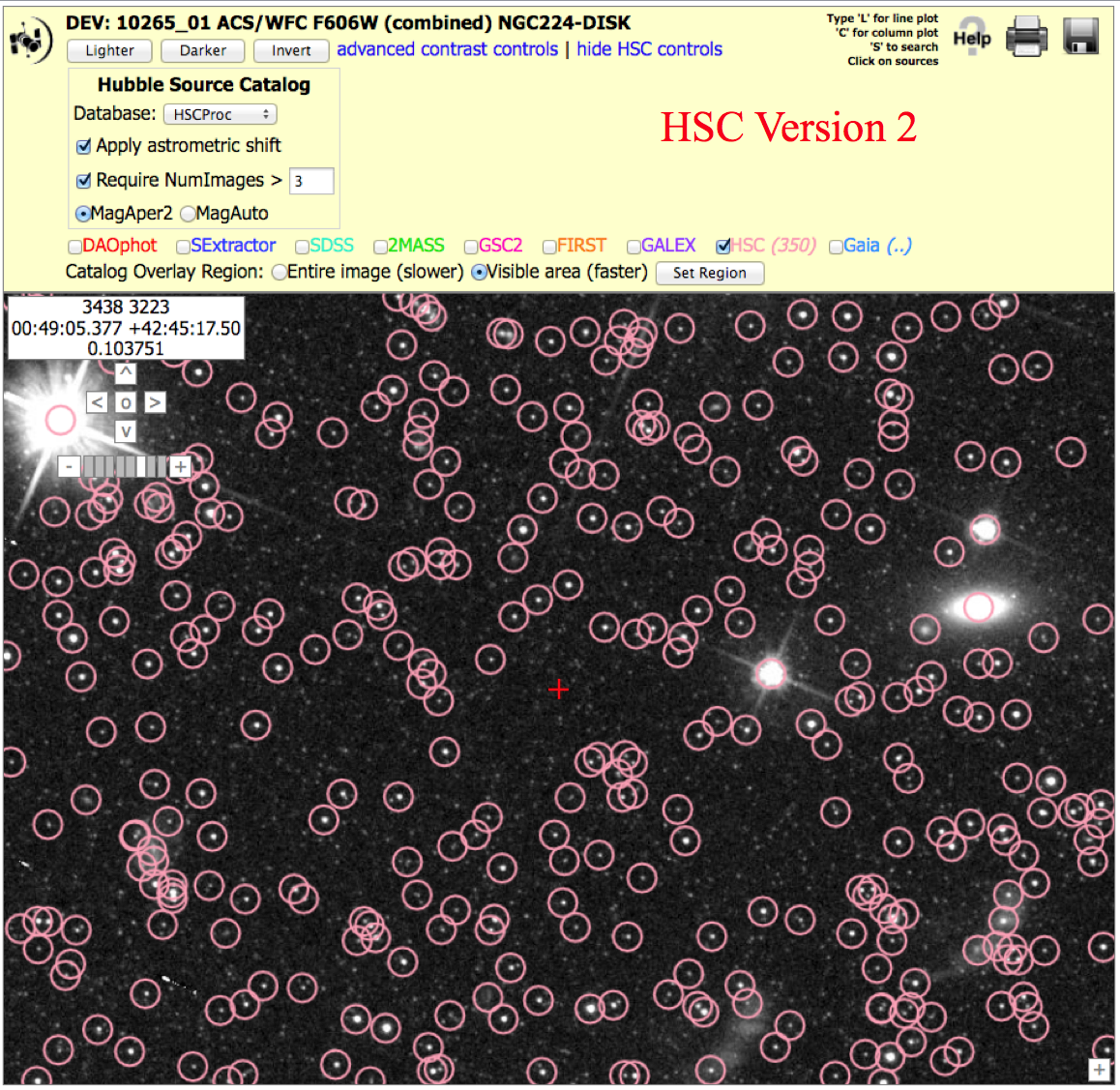

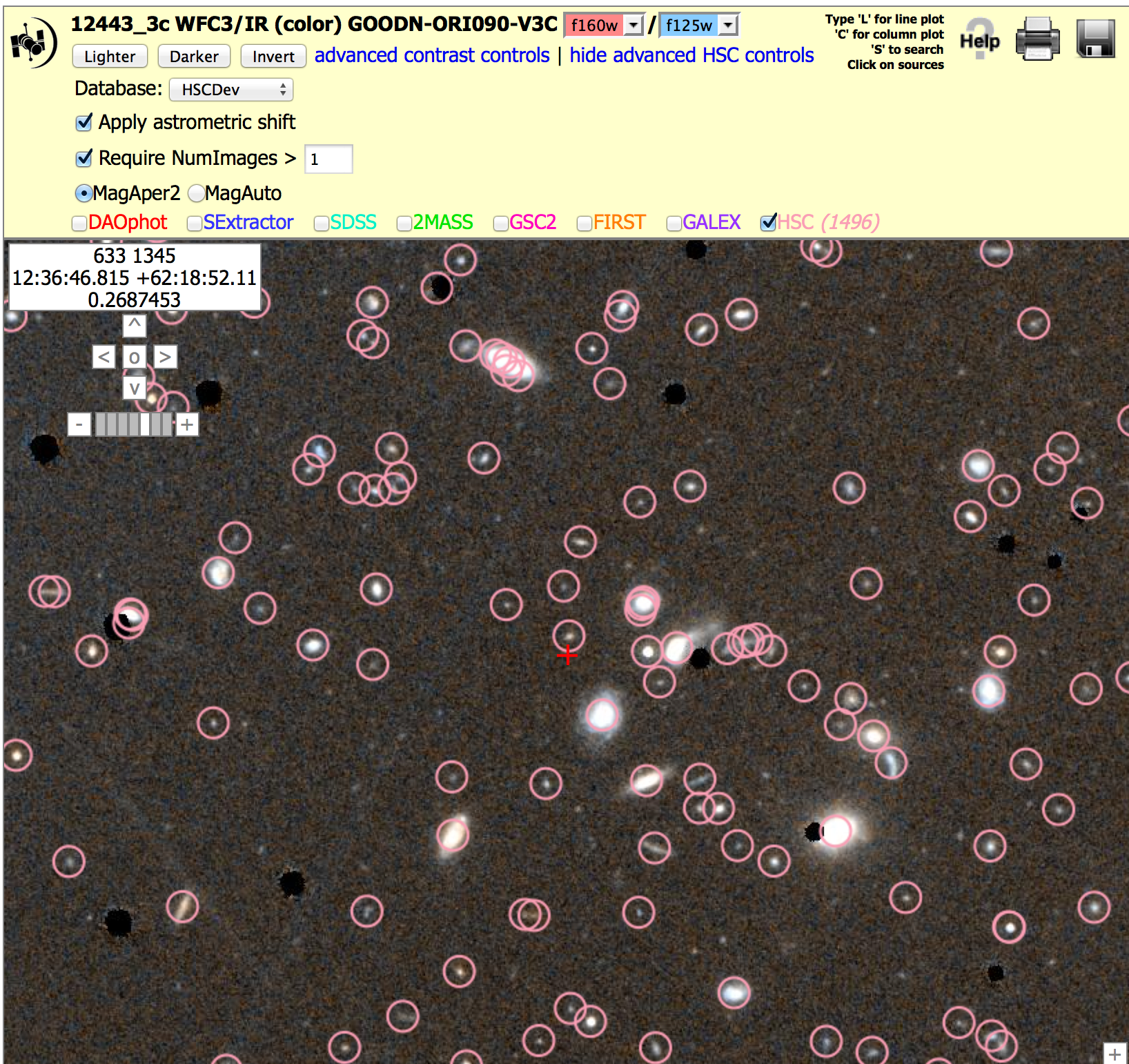

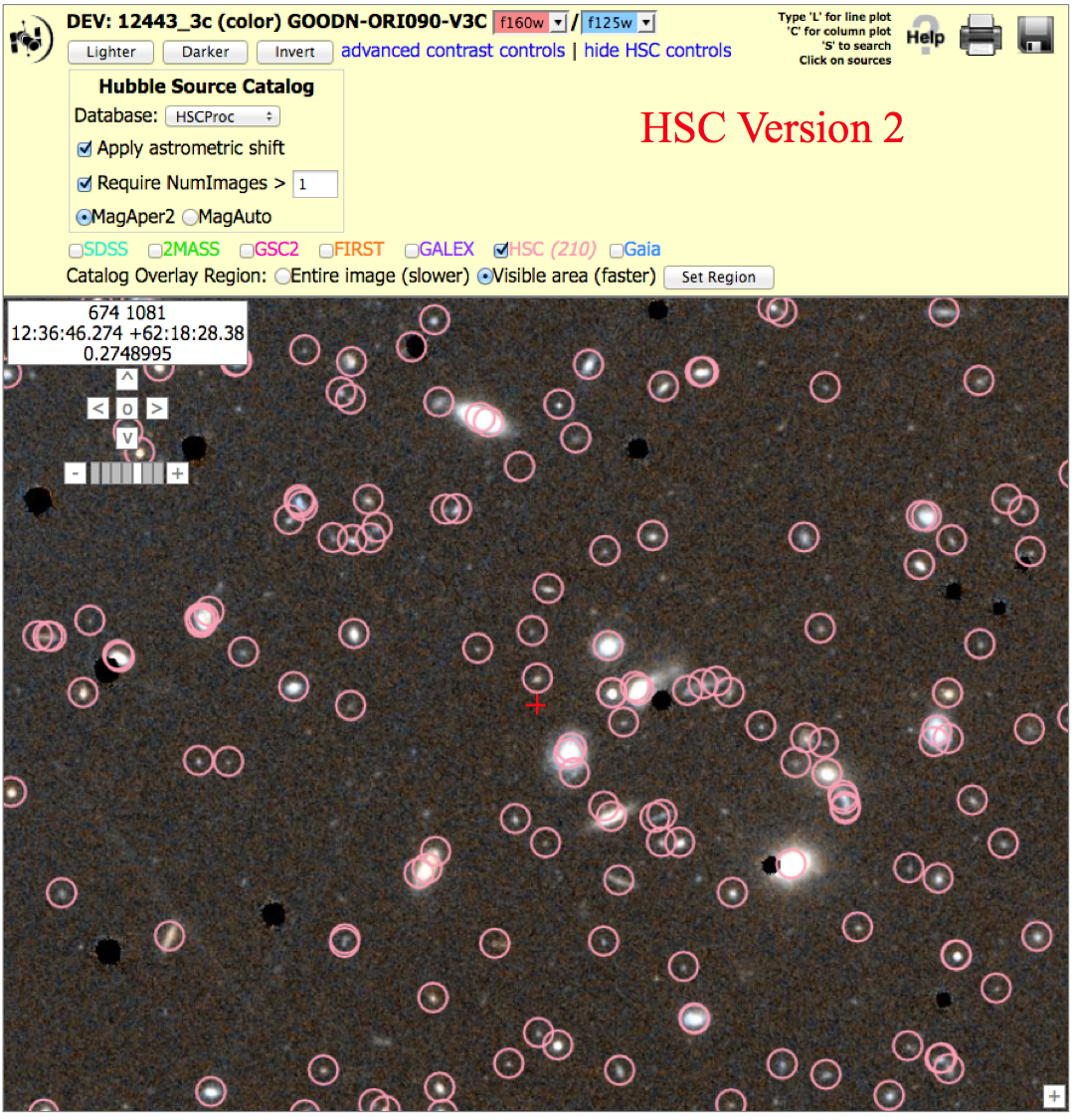

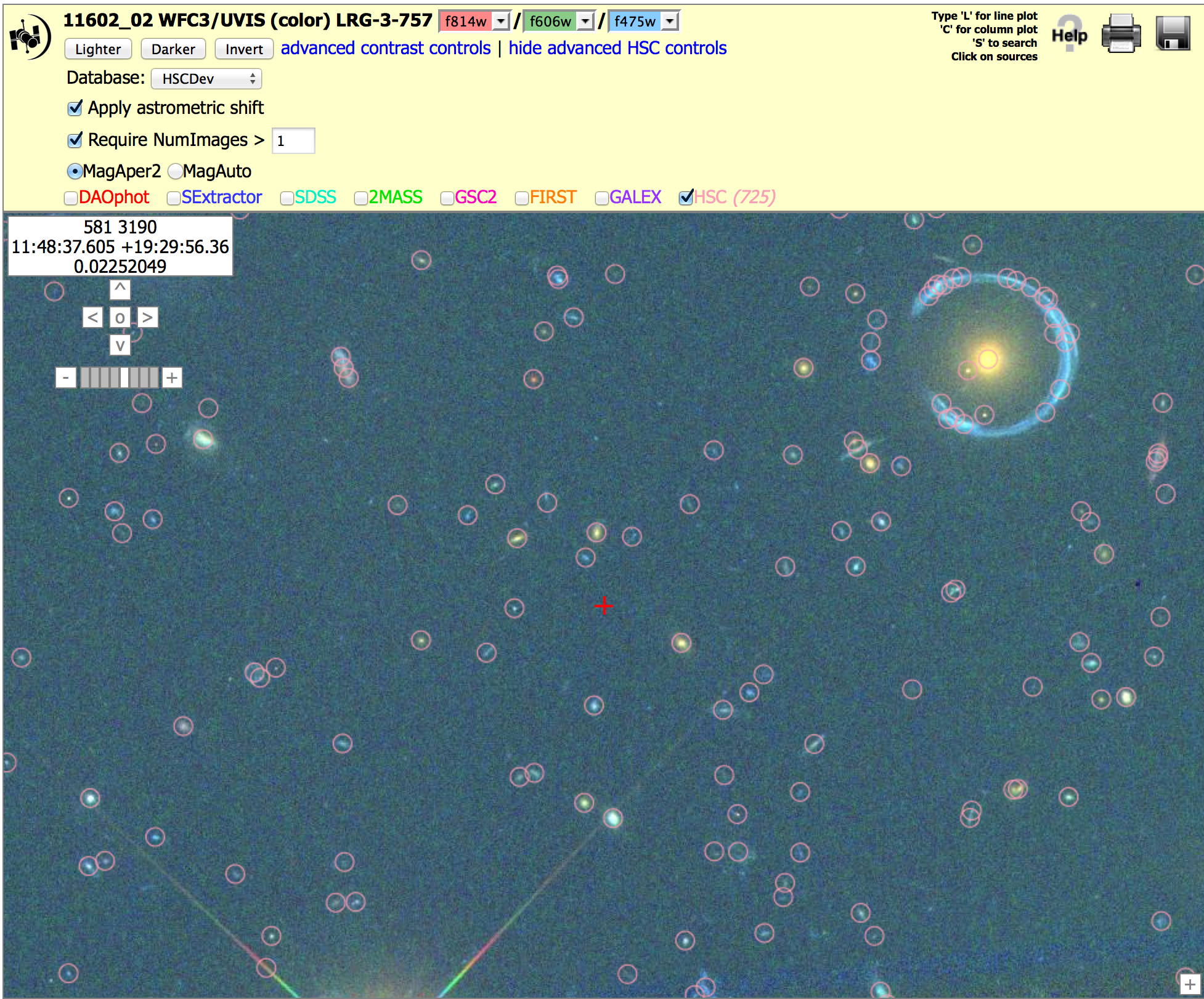

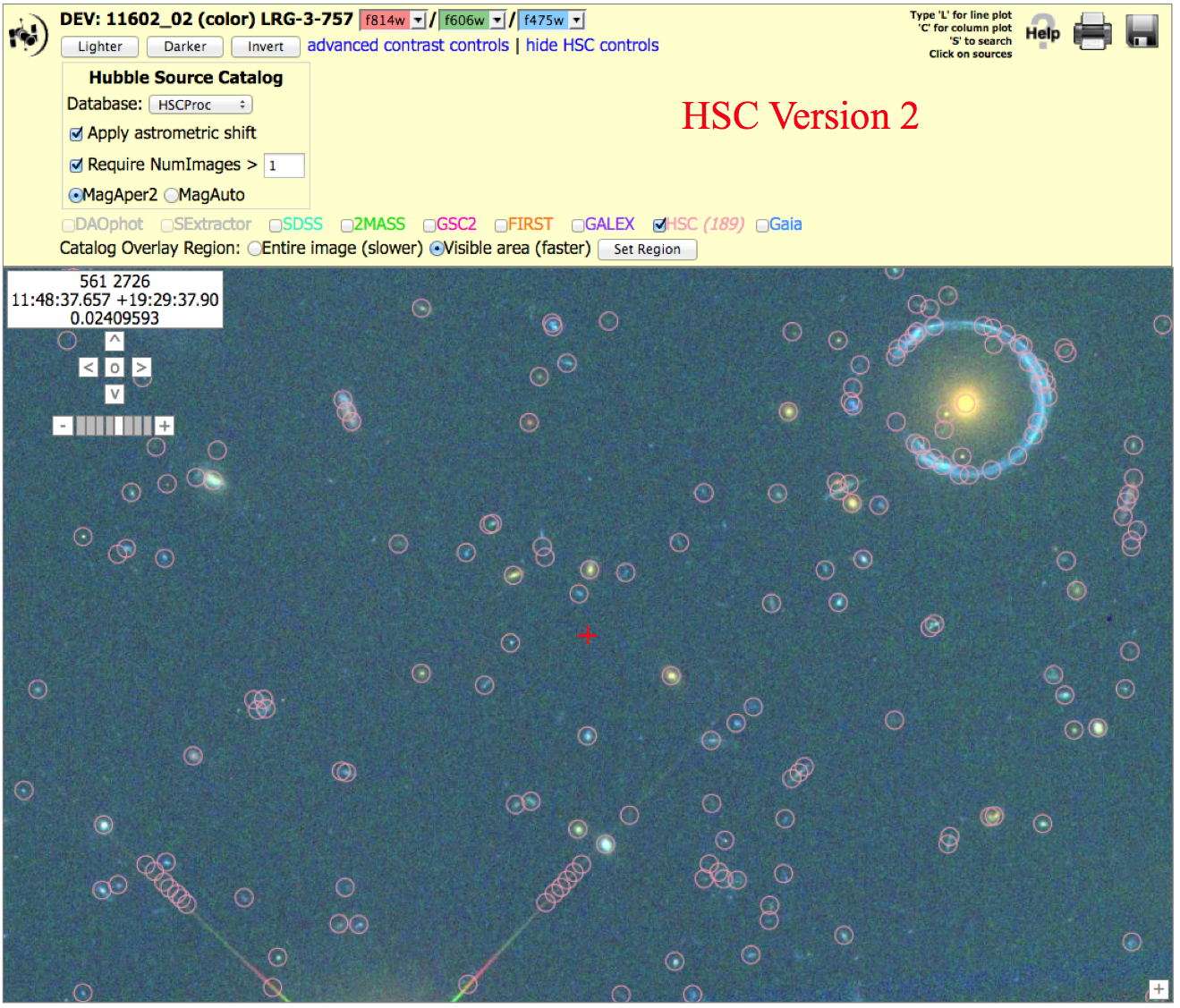

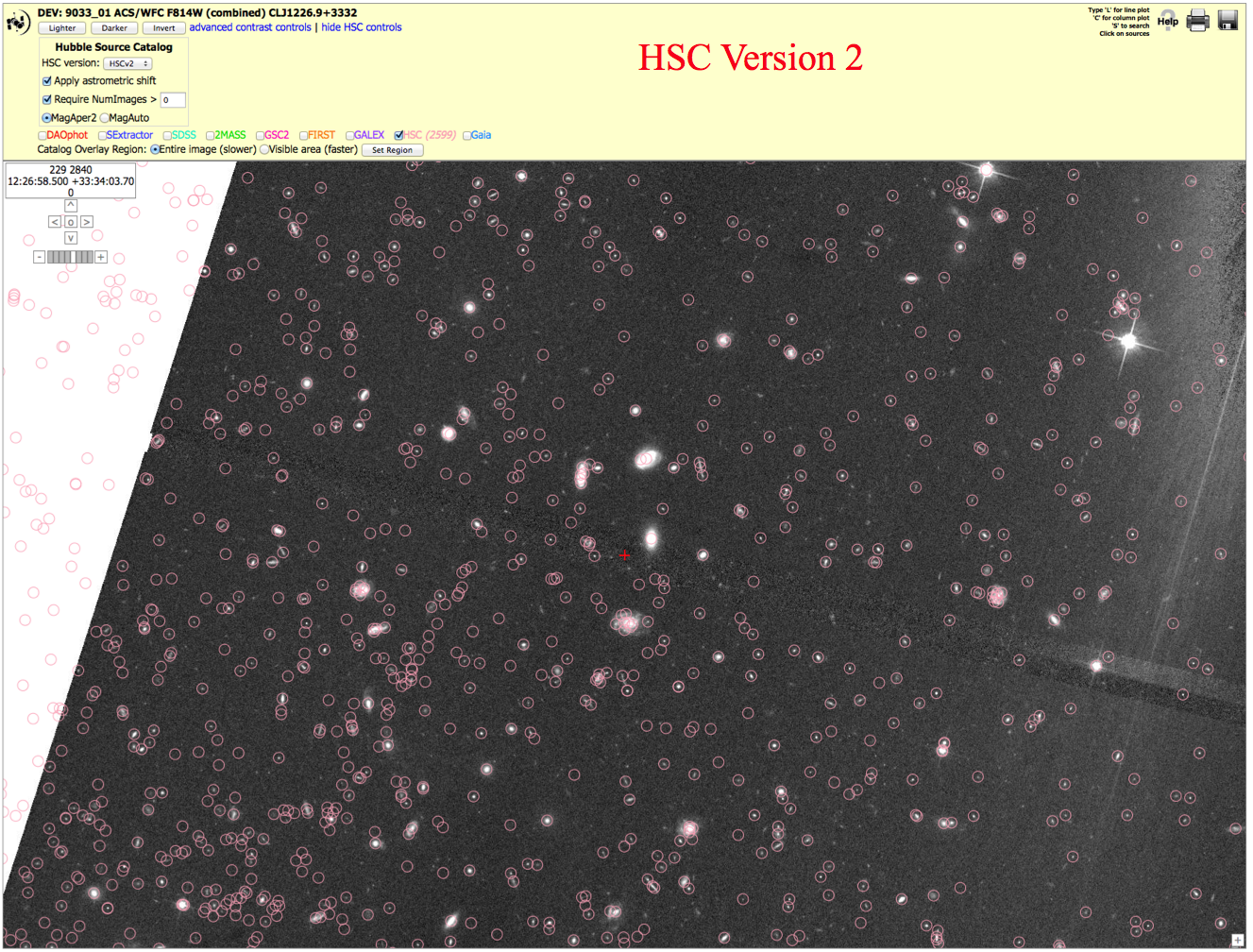

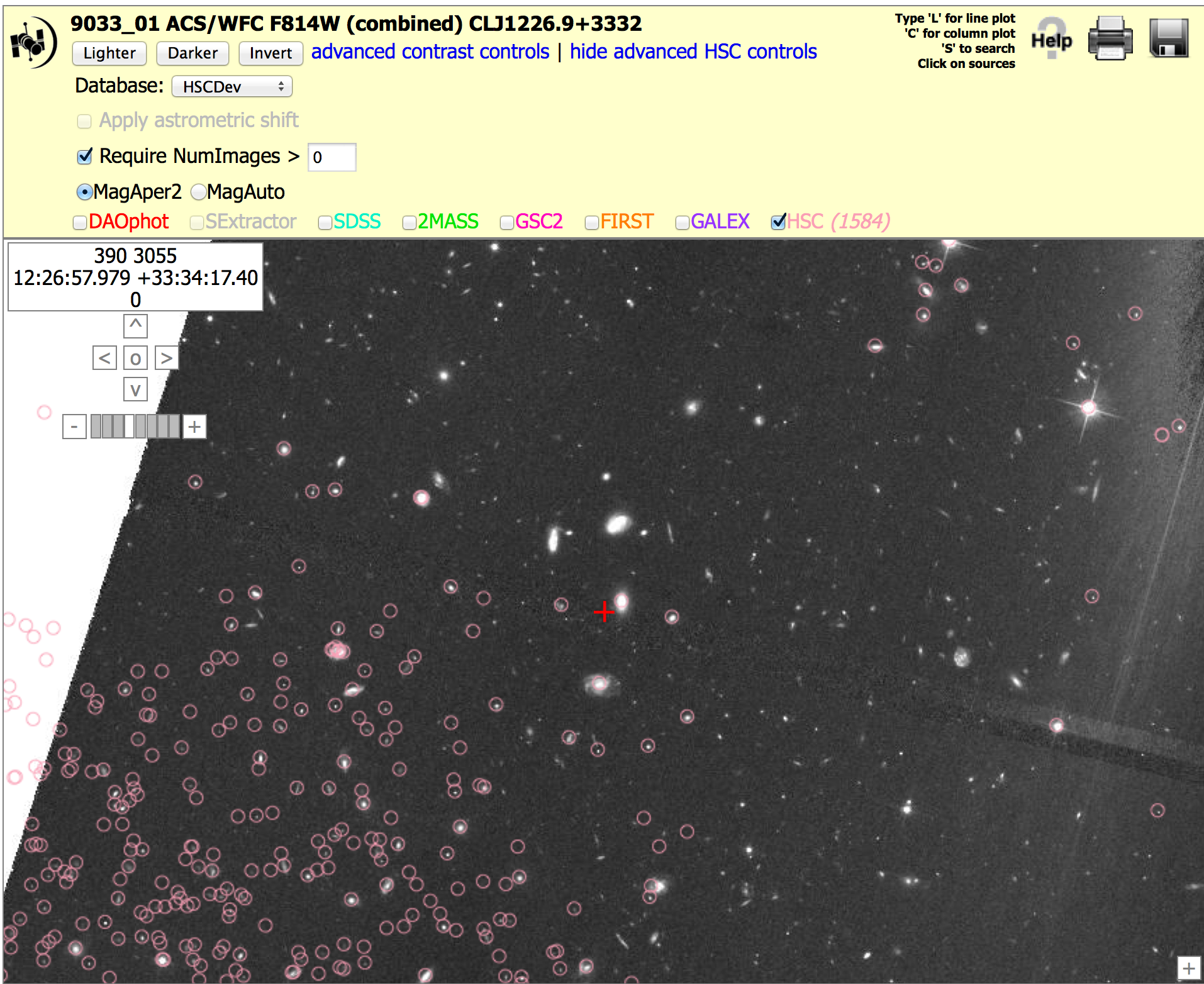

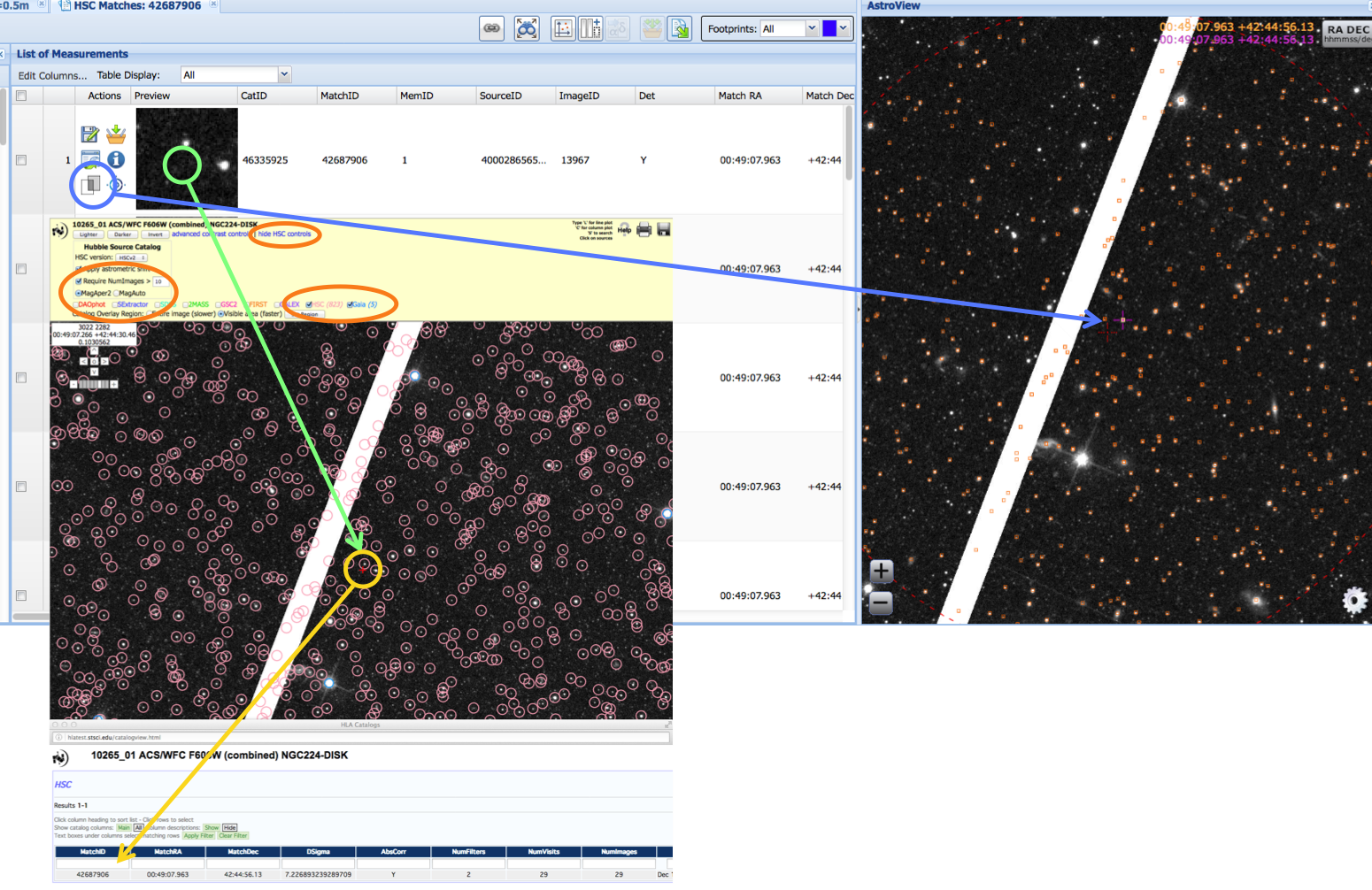

Doubling:

There are occasionally cases where not all the detections of the same source are matched together into a single object. In these cases, more than one match ID is assigned to the object, and two pink circles are generally seen at the highest magnification in the display, as shown by the yellow arrows in the example. Most of these are very faint objects, and the primary reason there are more in version 2 compared to version 1 is the deeper ACS source lists.


These "double objects" generally have very different numbers of images associated with the two circles. Hence, this problem can often be handled by using the appropriate value of NumImages to filter out one of the two circles. For example, using NumImages > 9 for this field removes essentially all of the doubling artifacts, at the expense of losing the faintest 25% of the objects (see figure on right).

Mismatched Sources:

The HSC matching algorithm uses a

friends-of-friends algorithm, together with a Bayesian method to break

up long chains (see Budavari &

Lubow 2012)

to match detections from different images. In some cases the

algorithm has been too aggressive and two very close, but physically

separate objects, have been matched together. This is rare, however.
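To illustrate why chains can form, here is a minimal friends-of-friends grouping sketch. It is not the HSC implementation: it works on flat 2D offsets, uses a simple quadratic pair loop, and omits the Bayesian chain-breaking step entirely. The function name, the linking length, and the sample positions are assumptions made for the example.

```python
import math


def friends_of_friends(points, linking_length):
    """Group 2D points so that any two points closer than
    `linking_length` end up in the same group (union-find)."""
    parent = list(range(len(points)))

    def find(i):
        # Follow parent links to the group root, compressing the path.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= linking_length:
                parent[find(i)] = find(j)  # merge the two groups

    groups = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Offsets in arcsec (made-up values). Detections 0 and 2 are 0.06 arcsec
# apart, farther than the linking length, but are chained through 1.
detections = [(0.00, 0.00), (0.03, 0.00), (0.06, 0.00), (1.00, 1.00)]
groups = friends_of_friends(detections, linking_length=0.04)
```

In this example the first three detections form a single group even though the two end points are not direct "friends", which is the kind of chain that a subsequent splitting step has to deal with.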

Bad Images:

Images taken when Hubble has lost lock on guide stars (generally after an Earth occultation) are the primary cause of bad images. We attempt to remove these images from the HLA, but occasionally a bad image is missed and a corresponding bad source list is generated. A document showing these and other examples of potential bad images can be found at HLA Images FAQ.

If you come across what you believe is a bad image, please inform us at archive@stsci.edu.

-

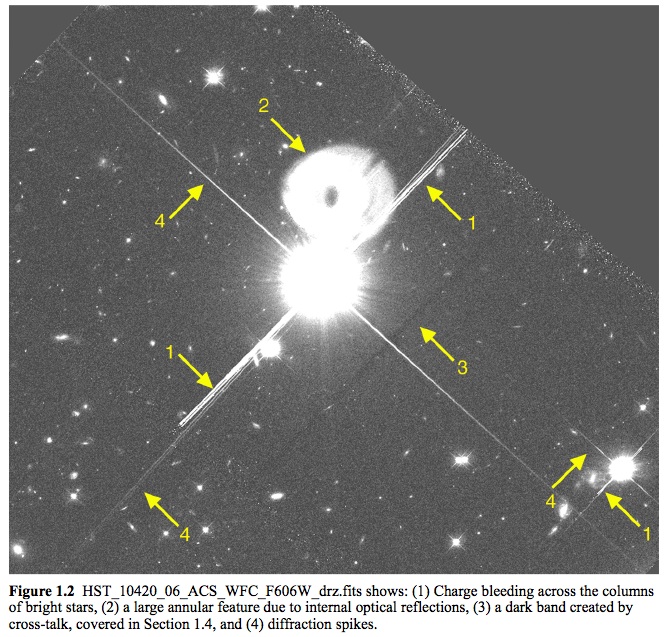

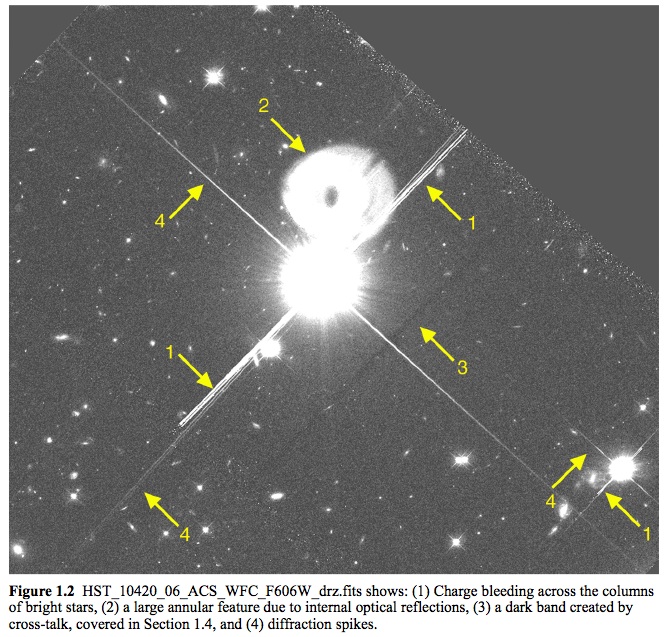

Is there a summary of known image anomalies?

Yes - HLA Images FAQ.

Here is a figure from the document showing a variety of artifacts associated with very bright objects.

-

How good is the photometry for the HSC?

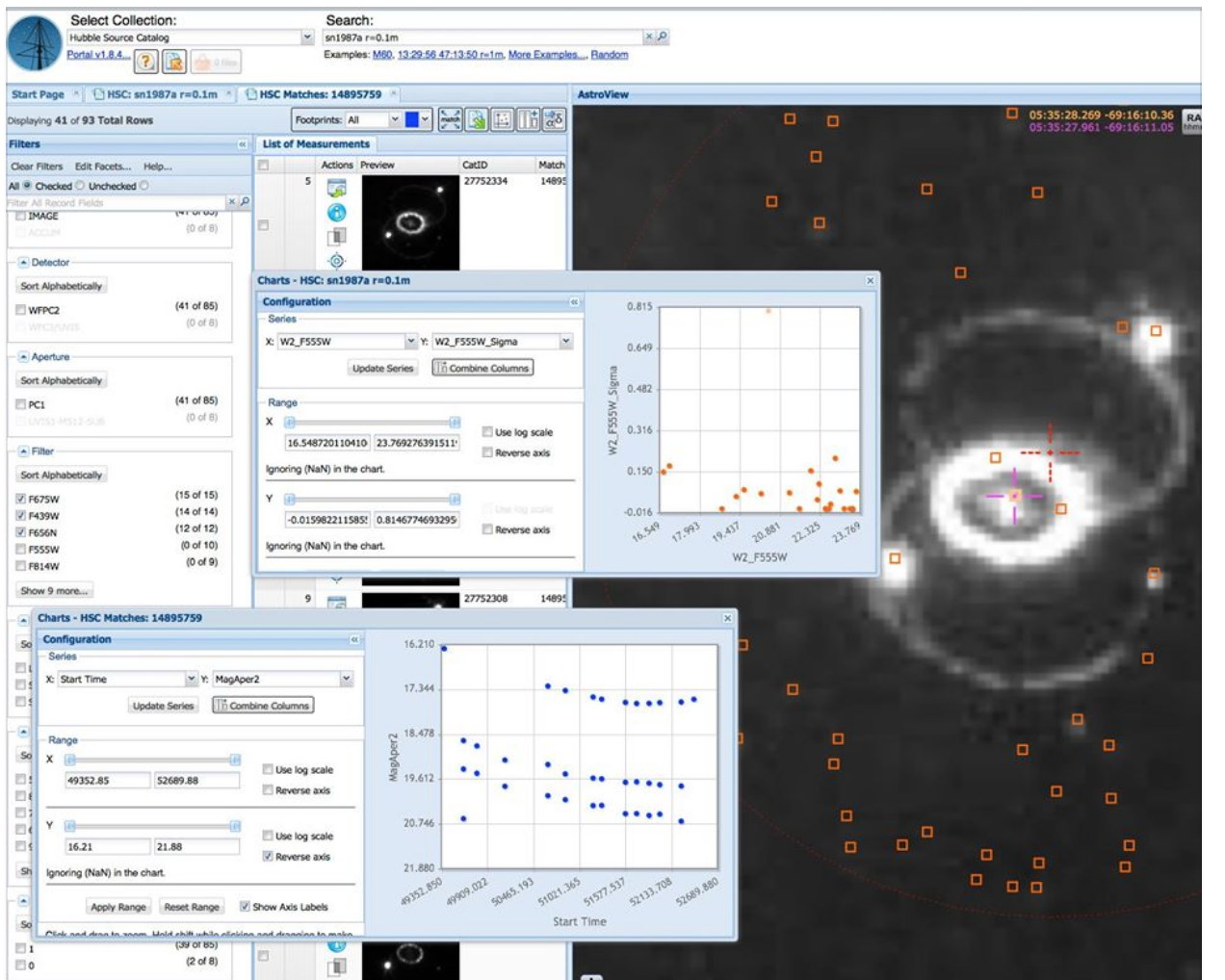

NOTE: This section is based on version 1 of the HSC, and more specifically, the studies performed for the (Whitmore et al. 2016) paper. Similar ongoing analysis of the version 2 database will be added to this section in the future.

Due to the diversity of the Hubble data, this is a hard

question to answer. We have taken a three-pronged approach to address it.

We first examine a few specific datasets, comparing magnitudes

directly for repeat measurements. The second approach is to

compare repeat measurements in the full database. While this provides

a better representation of the entire dataset, it can also be

misleading since the tails of the distributions are generally caused

by a small number of bad images and bad source lists. The third

approach is to produce a few well-known astronomical figures (e.g., color-magnitude diagram for the outer disk of M31 from Brown et al. 2009) based on HSC data, and compare them with the original study.

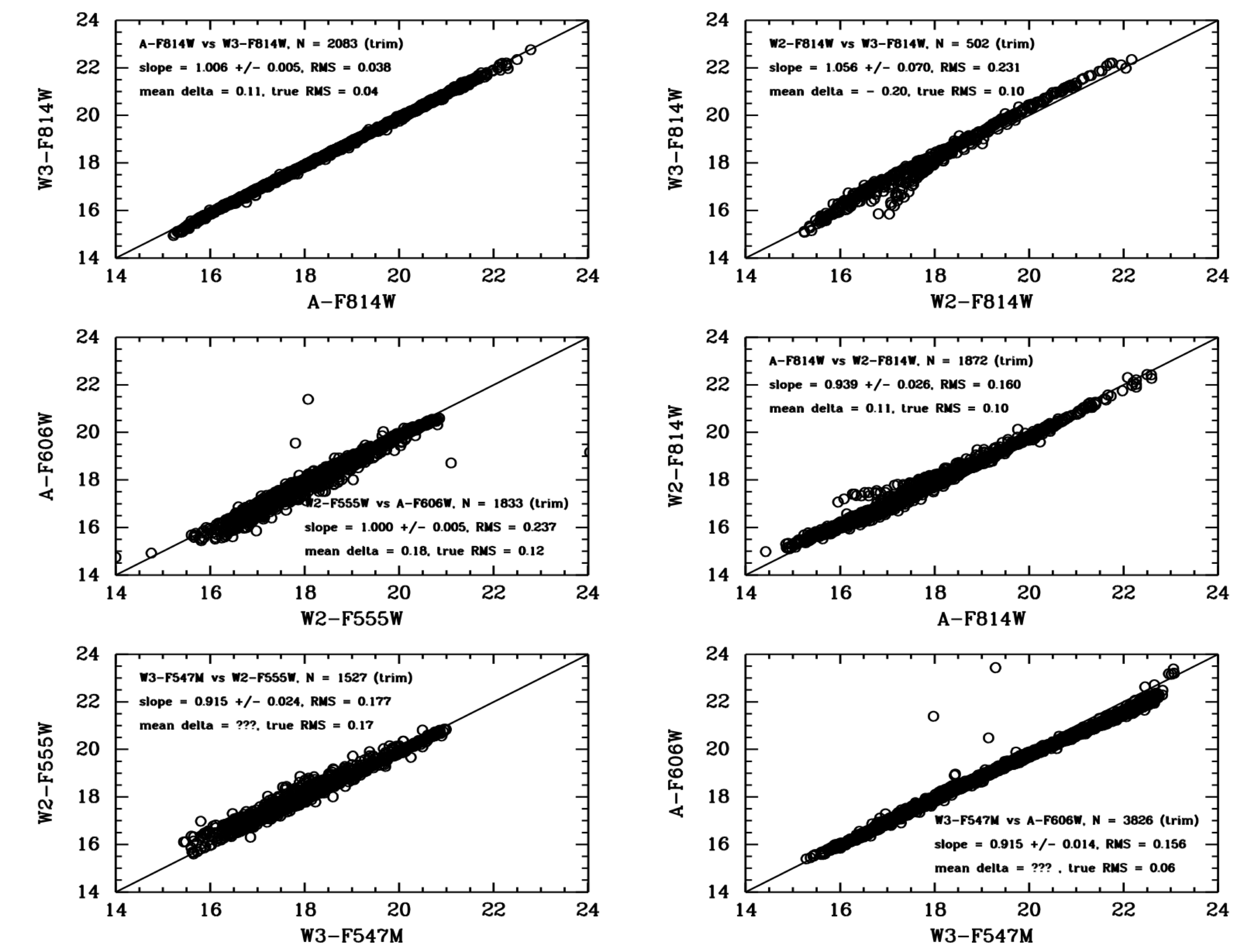

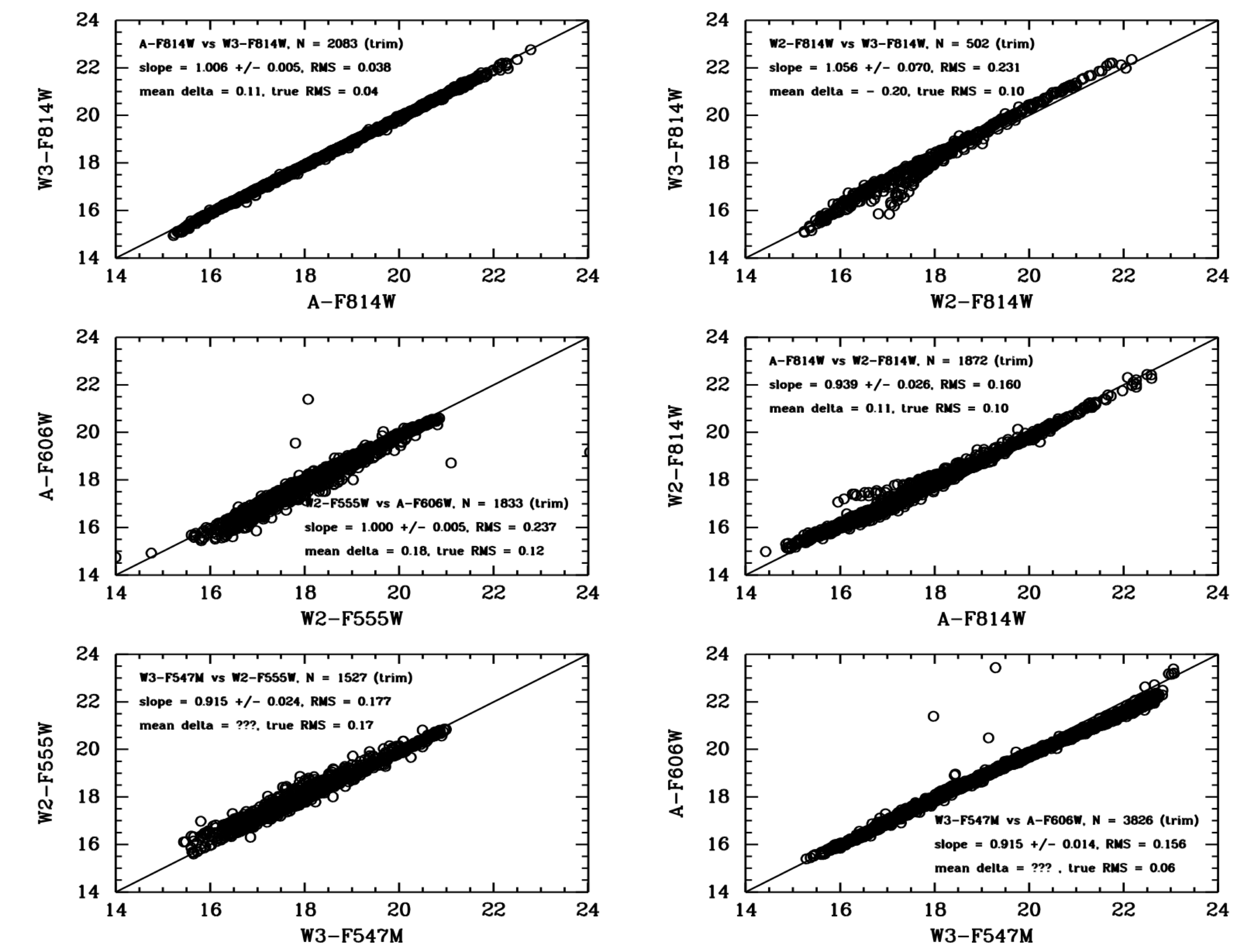

For our first case we examine the repeat measurements in the globular cluster M4. For this study, as well as the next two, we use MagAper2 values (i.e., aperture magnitudes), which are the default for the HSC. In the last example (extended galaxies) we use MagAuto values.

The figure shows that in general there is a good one-to-one agreement for repeat measurements using different instruments with similar filters. Starting with the best case, A-F814W vs. W3-F814W shows excellent results, with a slope near unity, values of RMS around 0.04 magnitudes, and essentially no outliers. However, an examination of the W2-F814W vs. W3-F814W and A-F814W vs. W2-F814W comparisons shows that there is an issue with a small fraction of the WFPC2 data. The short curved lines deviating from the 1-to-1 relation show evidence of the inclusion of a relatively small number of slightly saturated star measurements (i.e., roughly 5% of the data).

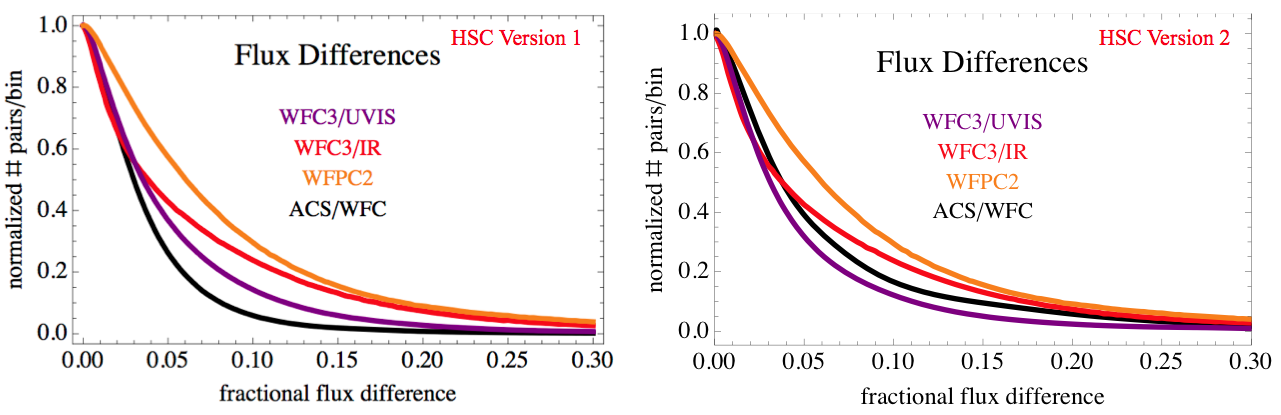

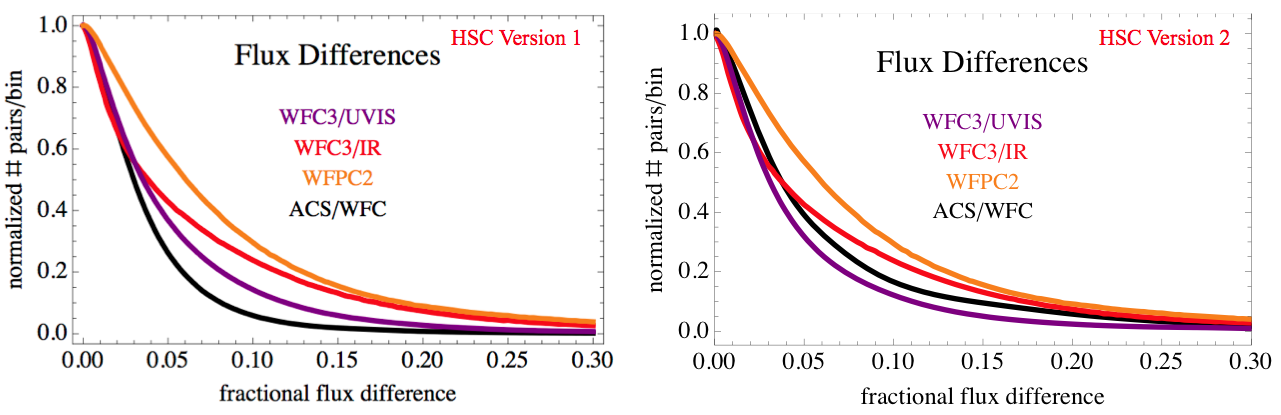

We now turn to our second approach: looking at repeat measurements for the entire HSC database. The following figures show the distribution of comparisons between independent photometric measurements of pairs of sources that belong to the same match and have the same filter in the HSC for Version 1 and Version 2. The x-axis is the flux difference ratio, defined as abs(flux1-flux2)/max(flux1,flux2). The y-axis is the number of sources per bin (whose size is a flux difference ratio of 0.0025), normalized to unity at a flux difference of zero.

The main point of this figure is to demonstrate that typical photometric uncertainties in the HSC are better than 0.10 magnitude for a majority of the data. We also note that the curves are quite similar in Versions 1 and 2, with the ACS/WFC becoming somewhat higher at faint magnitudes in Version 2 due to the inclusion of deeper source lists.
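To relate the x-axis of these figures to magnitudes, the flux difference ratio defined above can be converted to a magnitude difference. The sketch below assumes positive fluxes; the function names are illustrative and not part of any HSC interface.

```python
import math


def flux_difference_ratio(flux1, flux2):
    """abs(flux1 - flux2) / max(flux1, flux2), as defined in the text.
    Assumes both fluxes are positive."""
    return abs(flux1 - flux2) / max(flux1, flux2)


def ratio_to_delta_mag(ratio):
    """Magnitude difference for a given flux difference ratio.
    The fainter-to-brighter flux ratio is (1 - ratio)."""
    return -2.5 * math.log10(1.0 - ratio)


def delta_mag_to_ratio(delta_mag):
    """Inverse of ratio_to_delta_mag."""
    return 1.0 - 10.0 ** (-0.4 * delta_mag)


ratio = flux_difference_ratio(100.0, 90.0)  # two repeat measurements
ratio_for_tenth_mag = delta_mag_to_ratio(0.10)
```

Under this definition a difference of 0.10 magnitude corresponds to a flux difference ratio of about 0.088, i.e., roughly 35 bins of width 0.0025 along the x-axis.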

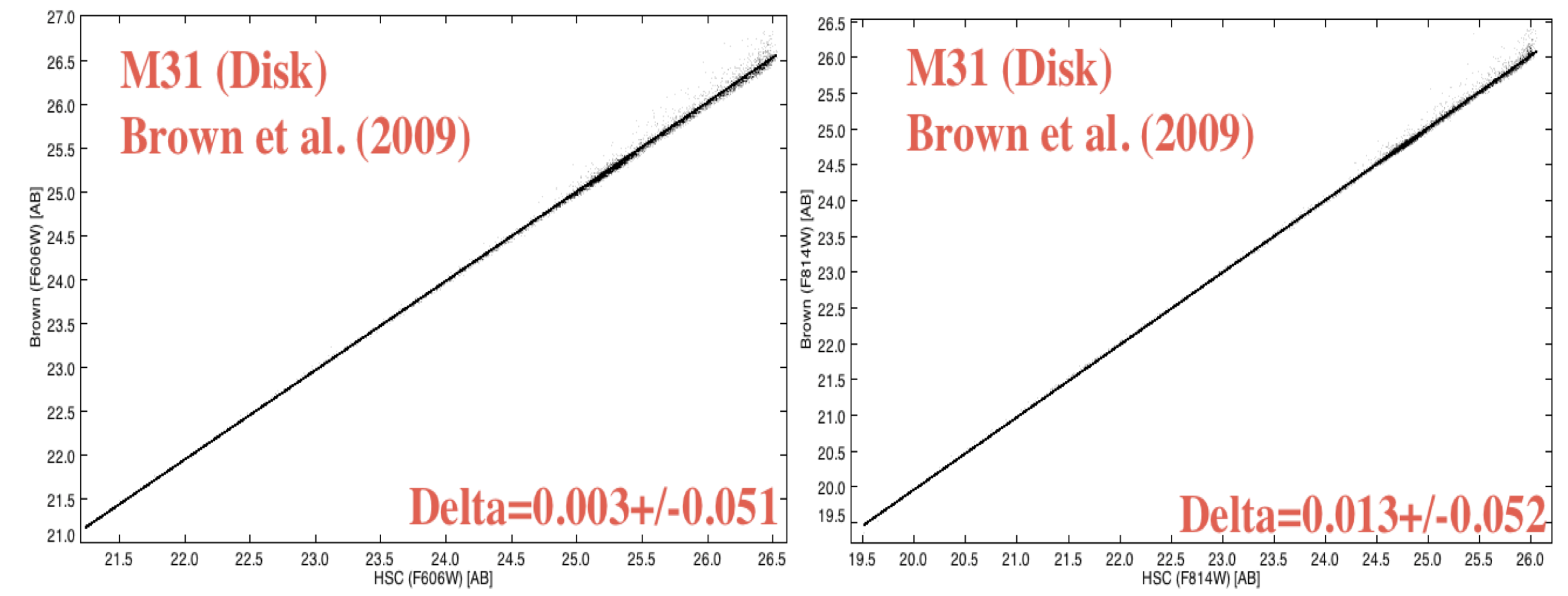

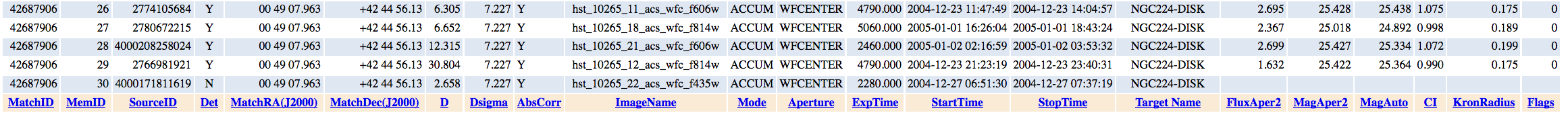

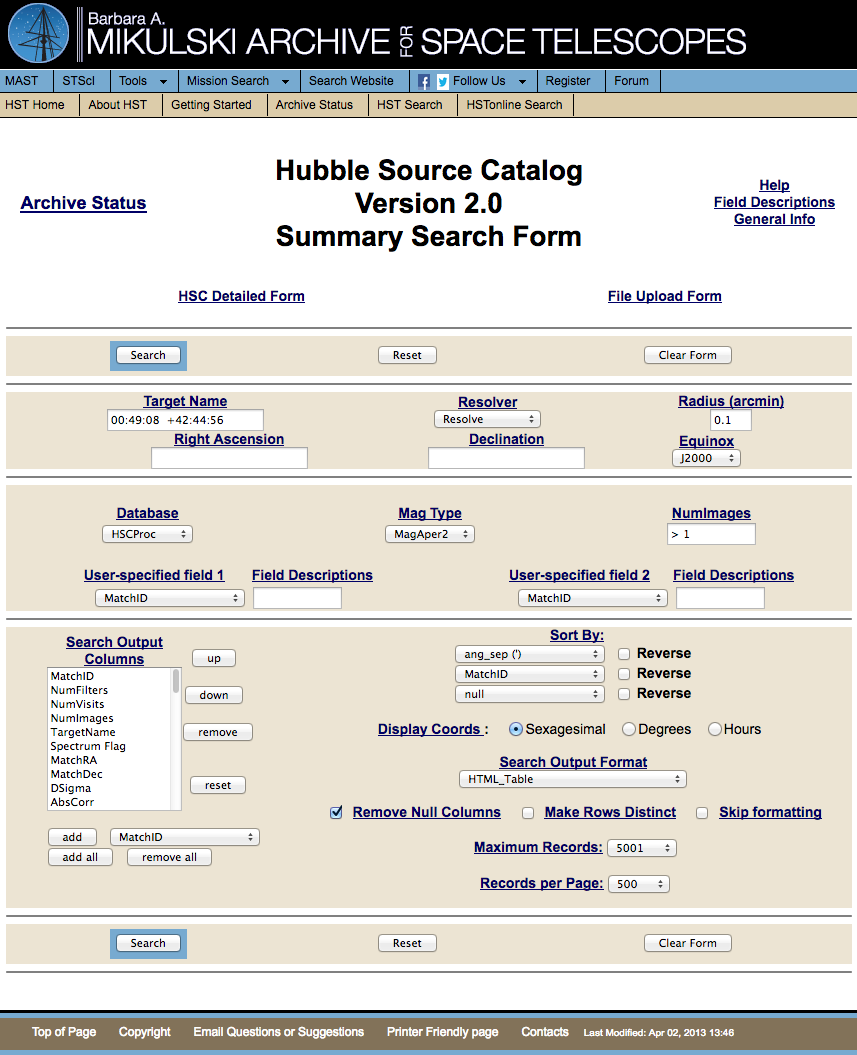

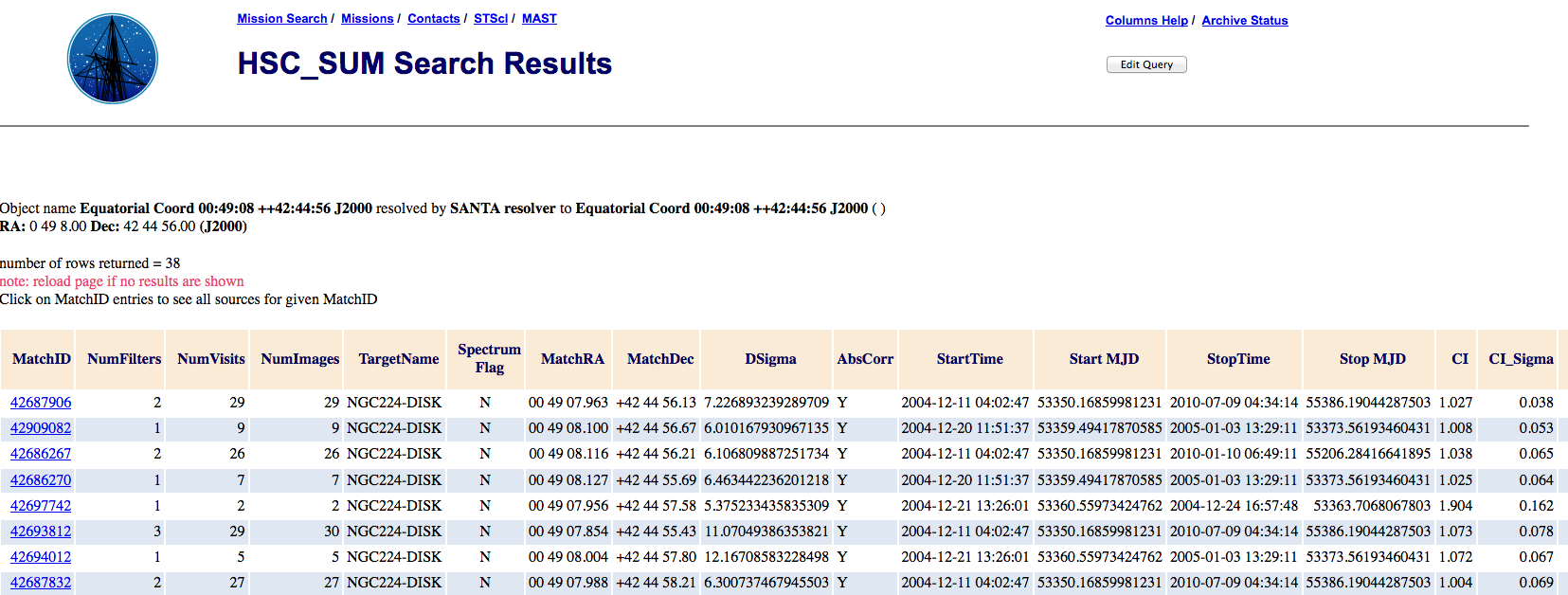

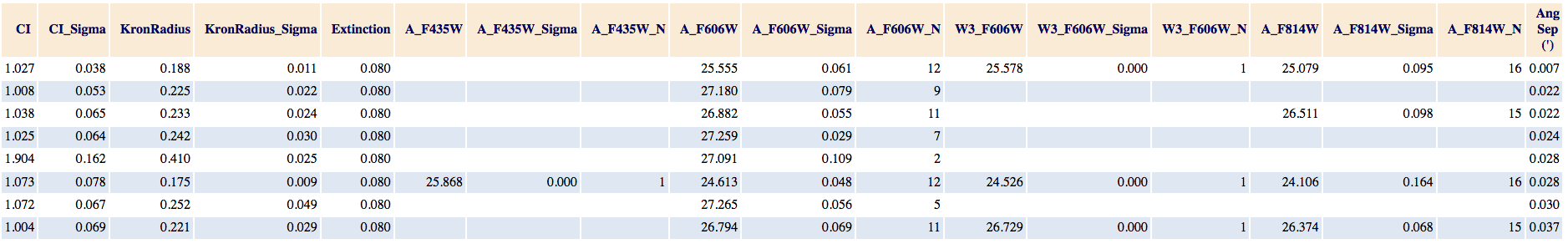

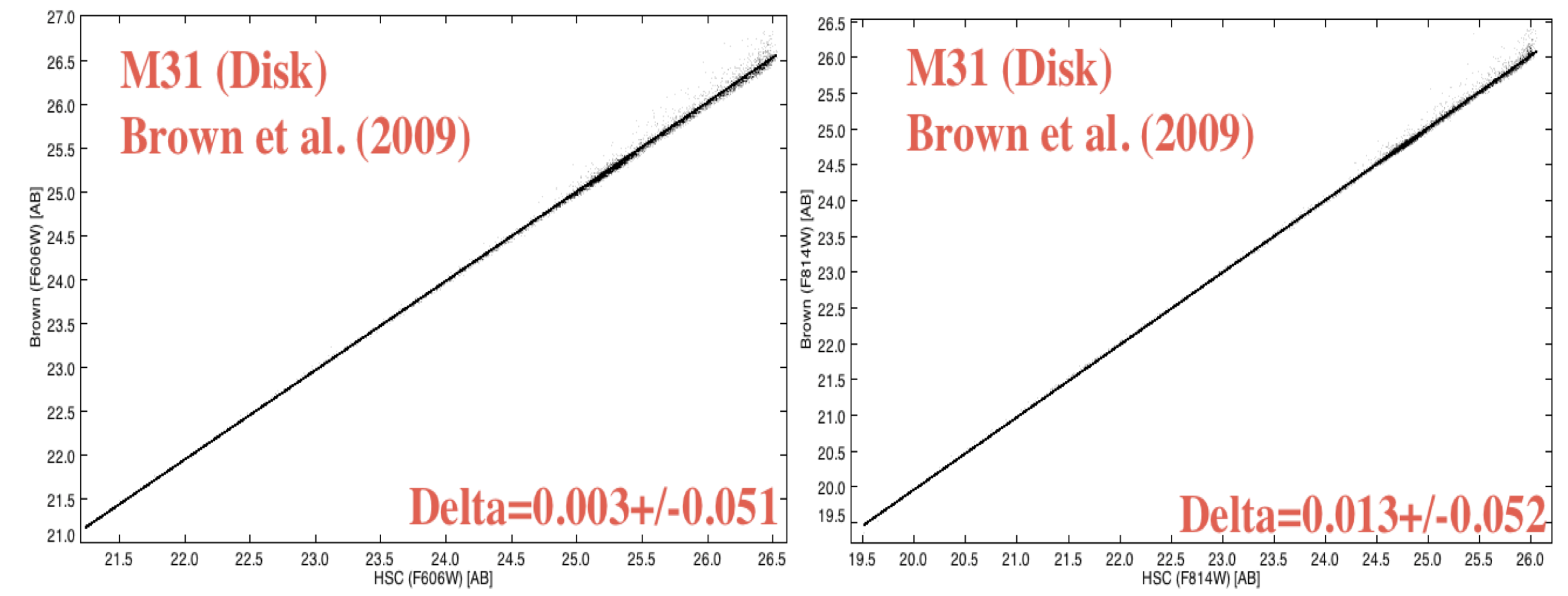

Next we compare HSC data with other studies.

The case shown below is a comparison between the HSC and the

Brown et al. (2009) deep

ACS/WFC observations of the outer disk of M31 (proposal = 10265).

The observing plan for this proposal resulted in 60 separate one-orbit visits (not typical of most HST observations), and hence provides an excellent opportunity for determining the internal uncertainties by examining repeat measurements.

In the range of overlap, the agreement is quite good, with zeropoint

differences less than 0.02 magnitudes (after corrections from ABMAG to

STMAG and from aperture to total magnitudes) and mean values of the

scatter around 0.05 mag. However, the Brown study goes roughly 3 magnitudes deeper,

since they work with an image made by combining all 60 visits. More details are available in

HSC Use Case #1,

and in (Whitmore et al. 2016).
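For reference, the size of an ABMAG-to-STMAG correction follows from the standard definitions of the two systems (STMAG = -2.5 log10(f_lambda) - 21.10 and ABMAG = -2.5 log10(f_nu) - 48.60, with fluxes in cgs units). The sketch below computes the offset for a given filter pivot wavelength. The function name and the 8000 Angstrom example wavelength are illustrative assumptions, not the values used in the comparison described above.

```python
import math

C_ANGSTROM_PER_S = 2.99792458e18  # speed of light in Angstrom per second


def stmag_minus_abmag(pivot_wavelength_angstrom):
    """Offset STMAG - ABMAG for a filter with the given pivot wavelength.

    Uses f_nu = f_lambda * lambda**2 / c together with the standard
    zero points (-21.10 for STMAG, -48.60 for ABMAG).
    """
    lam = pivot_wavelength_angstrom
    return 48.60 - 21.10 + 2.5 * math.log10(lam ** 2 / C_ANGSTROM_PER_S)


# Round-number example wavelength in the red (illustrative only).
offset = stmag_minus_abmag(8000.0)
```

The two systems coincide near 5475 Angstroms, so the correction is small for V-band-like filters and grows toward the red and the blue.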

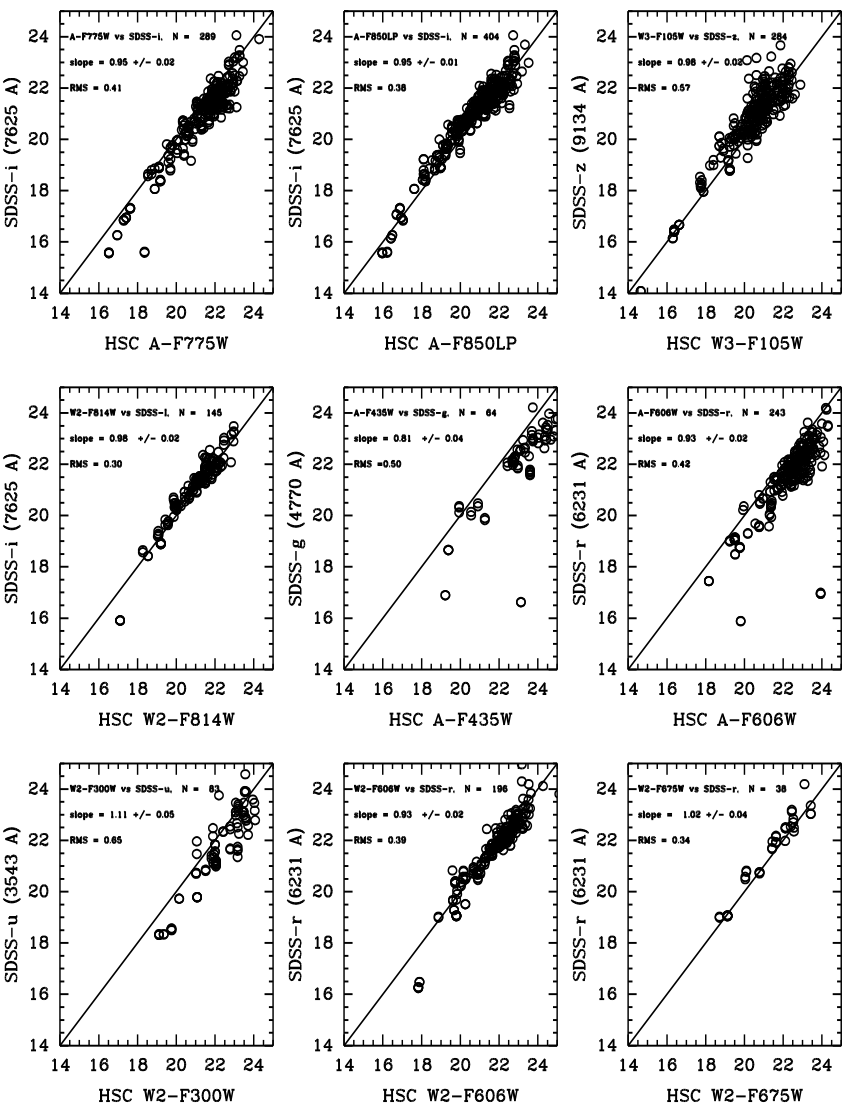

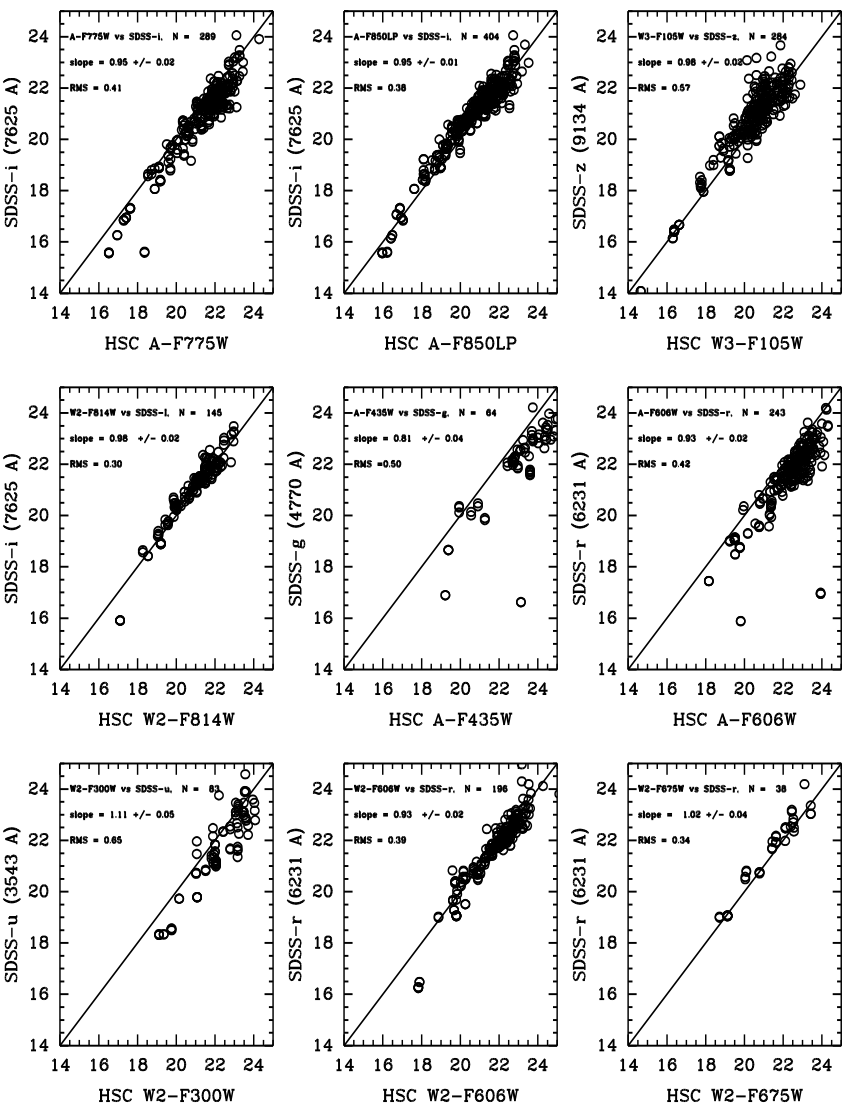

For our final photometric quality example we compare the HSC with ground-based Sloan Digital Sky Survey (SDSS) observations of galaxies in the Hubble Deep Field. Using MagAuto (extended object photometry) values in this case rather than MagAper2 (aperture magnitudes), we find generally good agreement with SDSS measurements. The scatter is typically a few tenths of a magnitude; the offsets are roughly the same and reflect the differences in photometric systems, since no transformations have been made for these comparisons. The best comparison is between A_F814W and SDSS-i. This reflects the fact that these two photometric systems are very similar, hence the transformation is nearly 1-to-1.

-

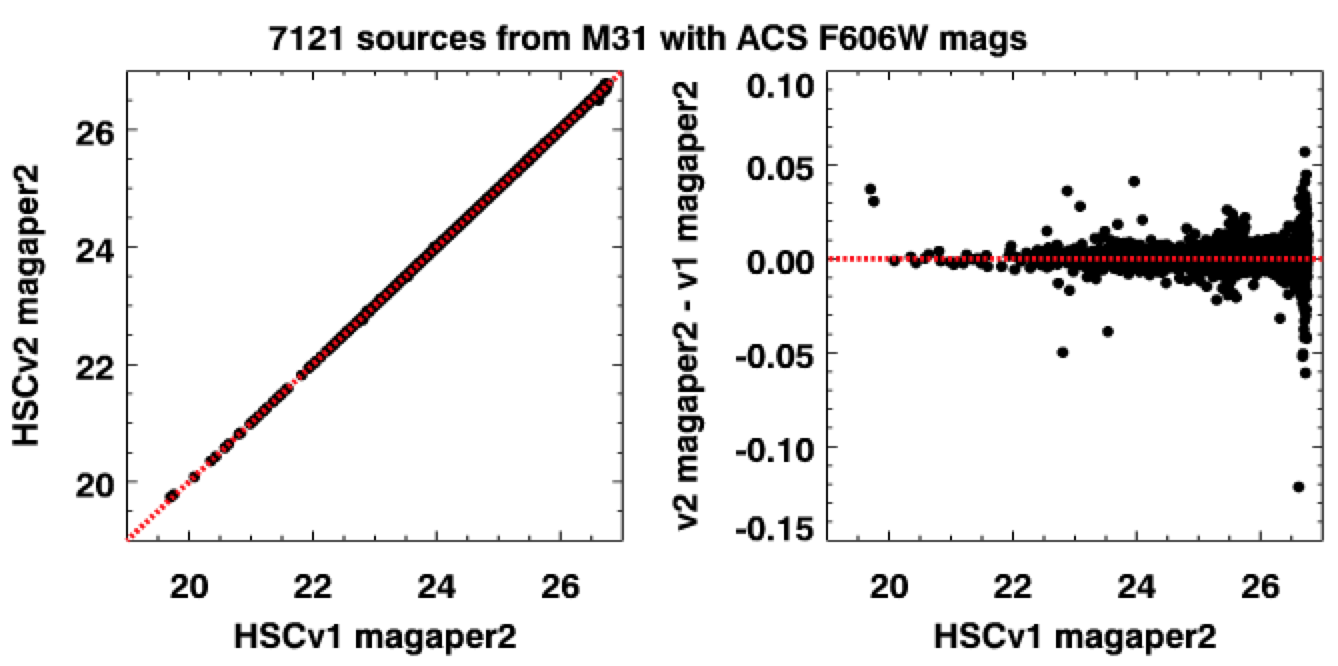

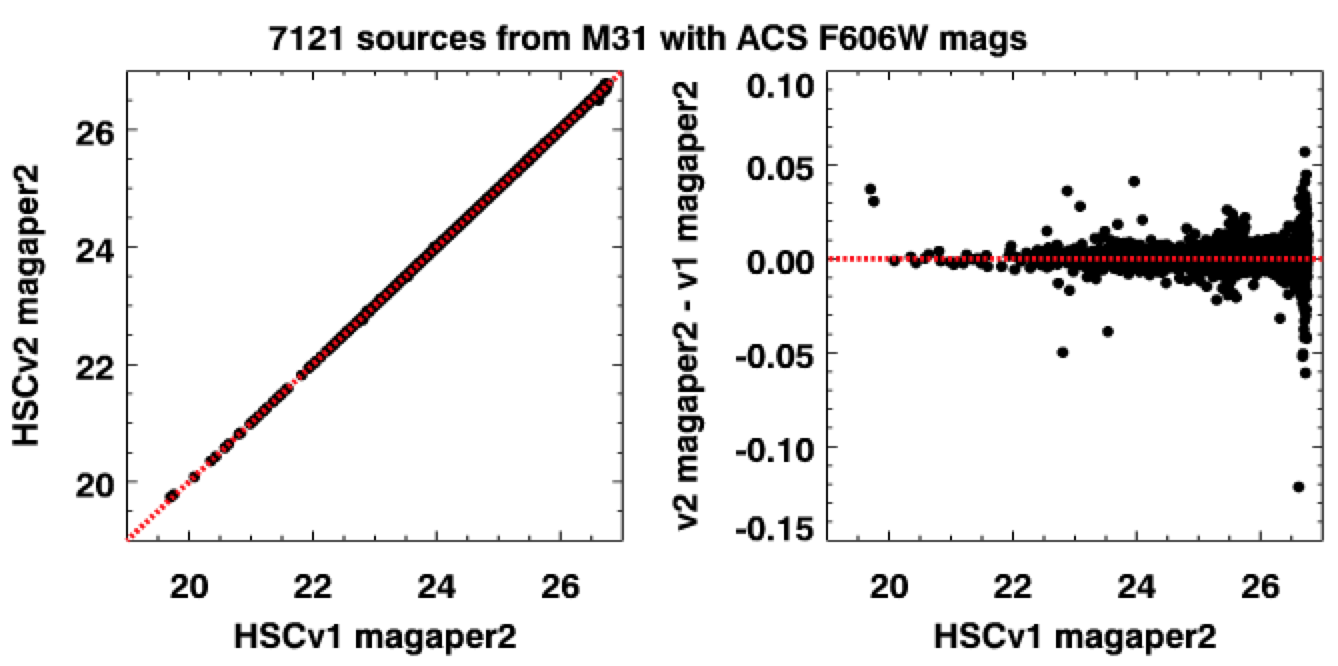

How does the HSC version 2 photometry compare with version 1?

In this figure we compare photometry from version 2 and version 1 in a field in M31. This figure was made by requiring that the same individual measurements are present in both versions, so that we are comparing "apples with apples". We find that the agreement is very good, with typical uncertainties of a few hundredths of a magnitude. Similar comparisons (e.g., in M87 where most of the point-like sources are actually resolved globular clusters) show similar results.


If we relax the constraint that the individual measurements be available in both versions we find slightly worse agreement. In particular, we find a tendency for version 2 to have larger RMS (sigma) values, as included in the summary form. This is primarily due to the inclusion of fainter (noisier) measurements in version 2. However, we find that the mean, and especially the median, values are not greatly affected.

A more detailed description of these comparisons will be included in

this section in the future.

-

What is the "Normalized" Concentration Index and how is it calculated?

In version 1, one of the items in the List of "Known Problems" was that raw values of the Concentration Index (the difference in magnitude for aperture photometry using magaper1 and magaper2) were averaged together to provide a single mean value in the summary form. This is a problem because each instrument/filter combination has a different normalization. For example, the Peak of Concentration Index for Stars and Aperture Correction Table shows that the raw value of the concentration index for stars is 1.08 for the ACS_WFC_F606W filter, 0.88 for WFC3_UVIS_F606W and 0.86 for WFPC2_F606W. Similarly, the raw values of the concentration index vary as a function of wavelength for some detectors. For example, WFC3_F110W has a peak value of 0.56 while WFC3_F160W has a value of 0.67. Hence, averaging the raw values of the Concentration Index together in Version 1 resulted in values that were not always very useful, and were often misleading.

In version 2 we have corrected this by

normalizing (dividing by) the value of the peak of the raw concentration index for stars using observations from each instrument and

filter, as provided

in the table listed above. The values are then averaged together to

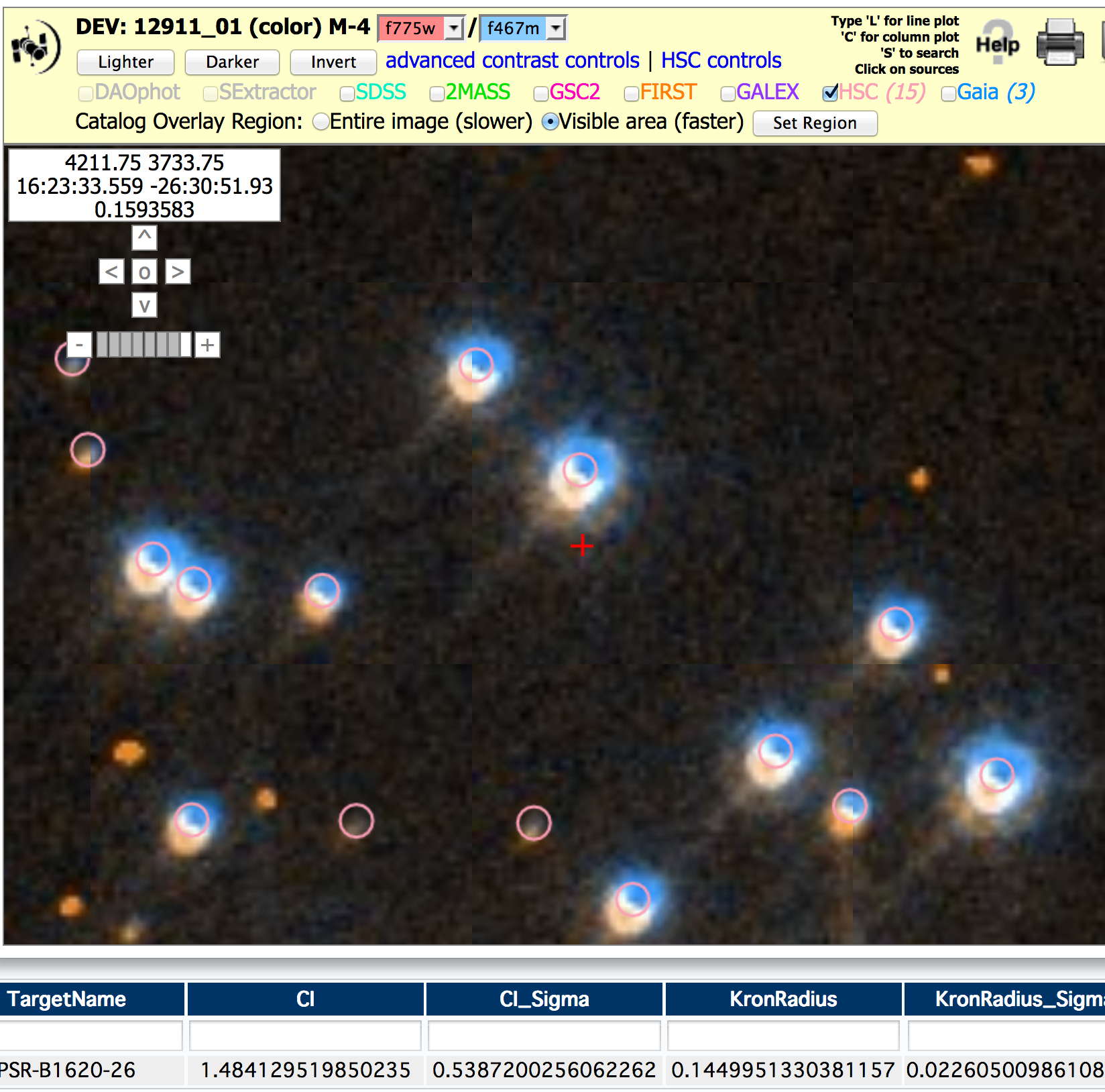

provide an estimate of the "Normalized" Concentration Index, which is listed as the value of CI in

version 2 of the

summary form.

Hence, objects with values of CI ~ 1.0 are likely to

be stars while sources with much larger values of CI are likely to be

extended sources, such as galaxies. There are cases where this is still not true (e.g., saturated stars, cosmic rays, misaligned exposures within a visit, ...), hence caution is still required when using values of CI.
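The normalization can be illustrated with the three F606W peak values quoted above. This is a minimal sketch, assuming a simple unweighted mean of the normalized values; the dictionary, function name, and sample measurements are illustrative and do not represent the actual HSC pipeline.

```python
# Peak raw CI for stars per instrument/filter, taken from the values
# quoted in the text above.
STELLAR_CI_PEAK = {
    "ACS_WFC_F606W": 1.08,
    "WFC3_UVIS_F606W": 0.88,
    "WFPC2_F606W": 0.86,
}


def normalized_ci(measurements):
    """Mean of raw CI values after dividing each by the stellar peak
    for its instrument/filter.

    `measurements` is a list of (instrument_filter, raw_ci) pairs.
    An unweighted mean is assumed here for illustration.
    """
    normalized = [raw / STELLAR_CI_PEAK[key] for key, raw in measurements]
    return sum(normalized) / len(normalized)


# A star measured at the stellar peak in each of the three modes.
star = [
    ("ACS_WFC_F606W", 1.08),
    ("WFC3_UVIS_F606W", 0.88),
    ("WFPC2_F606W", 0.86),
]

raw_mean = sum(ci for _, ci in star) / len(star)  # version 1 style average
ci_normalized = normalized_ci(star)               # version 2 style average
```

The raw mean for this star is 0.94, which matches none of the three stellar peaks, while the normalized value is 1.0 regardless of which instruments contributed.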

-

How good is the astrometry for the HSC?

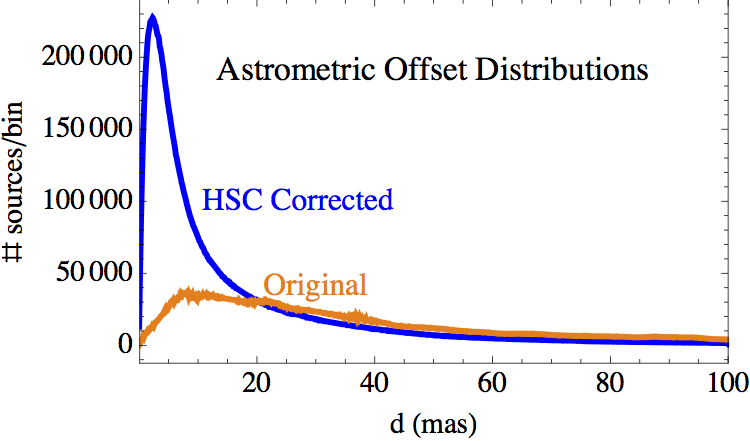

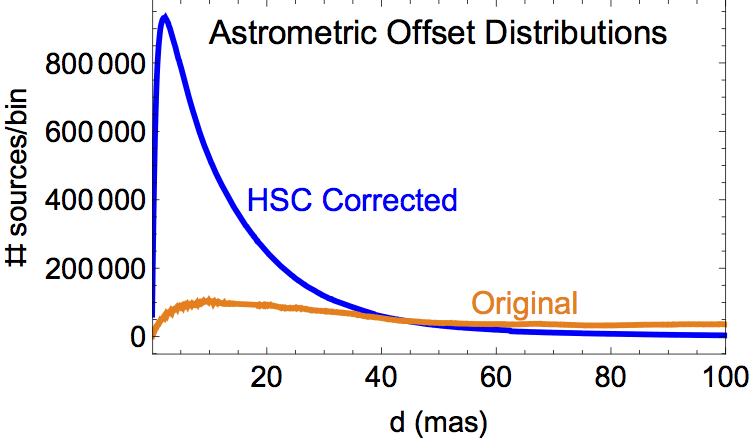

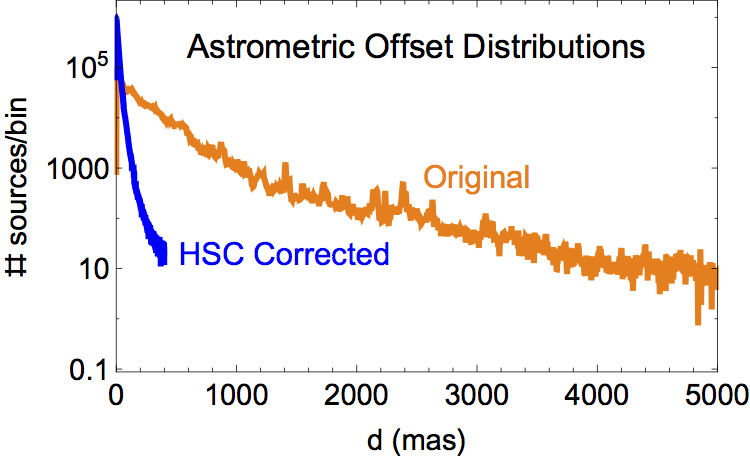

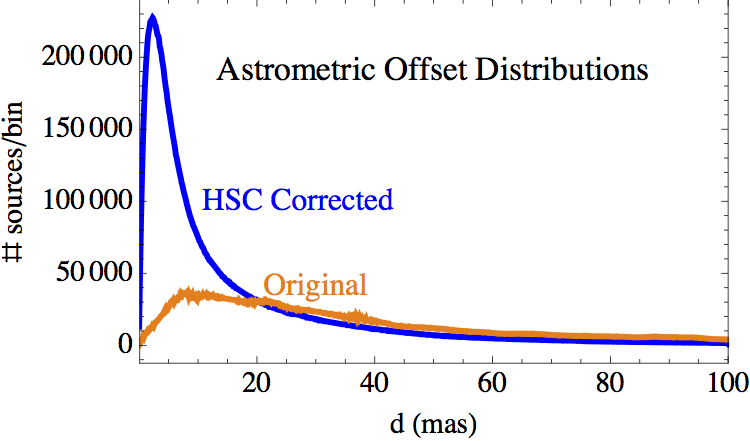

This plot from version 1 shows (in blue) the distribution of the relative astrometric errors in the HSC corrected astrometry, as measured by the positional offsets of the sources contributing to multi-visit matches from their match positions. The units for the x-axis are milli-arcsec (mas). The y-axis is the number of sources per bin, where each bin is 0.1 mas in width. Plotted in orange is the corresponding distribution of astrometric errors based on the original HST image astrometry.

The peak (mode) of the HSC corrected astrometric error distribution in HSC version 1 was 2.3 mas, while the median offset was 8.0 mas. The original, uncorrected (i.e., current HST) astrometry has corresponding values of 9.3 mas and 68 mas, respectively.
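The difference between the peak (mode) and the median quoted above reflects the long tail of the offset distribution. The following is a minimal sketch of how both statistics could be computed from a list of offsets, using 0.1 mas bins for the mode as in the plot. The function name and the sample offsets are made up for illustration only.

```python
import statistics
from collections import Counter


def offset_summary(offsets_mas, bin_width=0.1):
    """Return (mode, median) of a list of offsets in mas.

    The mode is taken as the center of the most populated histogram
    bin of width `bin_width`.
    """
    bins = Counter(int(x // bin_width) for x in offsets_mas)
    peak_bin, _ = max(bins.items(), key=lambda item: item[1])
    mode = (peak_bin + 0.5) * bin_width
    median = statistics.median(offsets_mas)
    return mode, median


# Made-up offsets: a cluster near 2.3 mas plus a tail to large values.
sample_offsets = [2.25, 2.31, 2.38, 5.0, 8.0, 12.5, 40.0]
mode, median = offset_summary(sample_offsets)
```

For a skewed distribution like this one the median sits well above the mode, which is the same pattern seen in the version 1 statistics.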

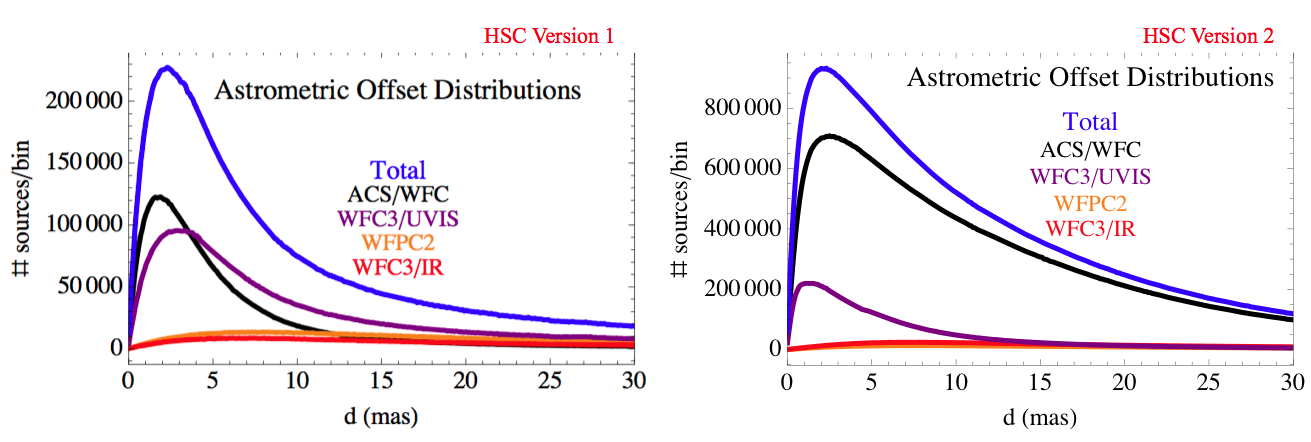

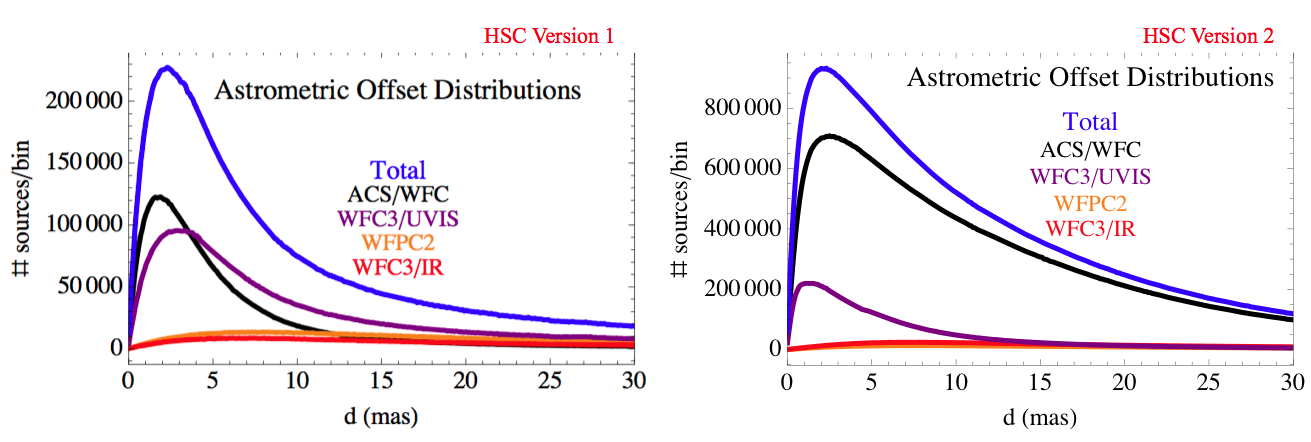

The following two figures show the corrected astrometric offset distributions for the different instruments for multi-visit matches in the HSC for version 1 and version 2. The distribution is actually flatter for version 2, largely due to the inclusion of fainter ACS sources with correspondingly larger pointing uncertainties. Also note the large increase in the number of ACS/WFC sources, due both to the deeper source lists and the addition of four more years of data.

As expected, the instruments with smaller pixels (ACS [50 mas] and WFC3/UVIS [40 mas]) show the best astrometric accuracy, with a peak less than a few mas and typical values less than 10 mas. On the other hand, the instruments with larger pixels (WFPC2 [100 mas on the WFC chips, which dominate the statistics] and WFC3/IR [130 mas]) have much larger astrometric uncertainties, with peaks less than 10 mas and typical values less than 20 mas.

Several astrometric studies are currently being analyzed and will be added to this FAQ in the future.

-

How does the HSC compare with Gaia astrometry?

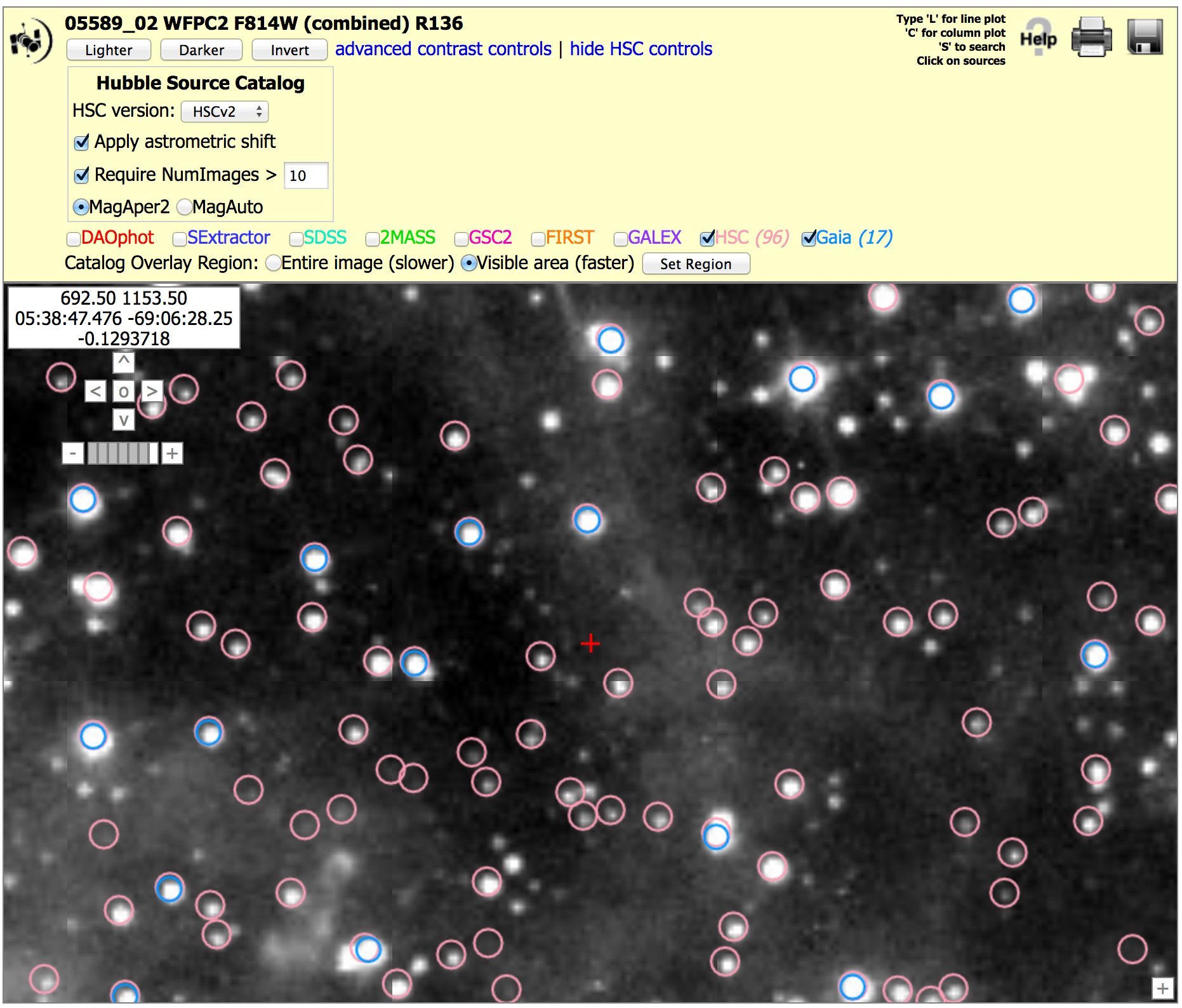

This figure shows a small portion of the outskirts of 30 Dor. There are 17 Gaia sources (blue) in this region and 96 HSC sources (pink) with NumImages > 10. There would be 279 HSC sources if we used a NumImages > 5 criterion to reach fainter sources. This figure shows good agreement in the positions of HSC relative to Gaia for this field. More detailed analyses are currently underway and will be added to this section in the future.

-

What are the future plans for the HSC?

- Improve the WFPC2 source lists using the newer ACS and WFC3 pipelines.
- Address the known problems in HSC Version 2.
- Integrate the Discovery Portal more fully with the HSC.
- Use mosaic-based (rather than visit-based) source detection lists, and perform forced photometry at these locations on all images.


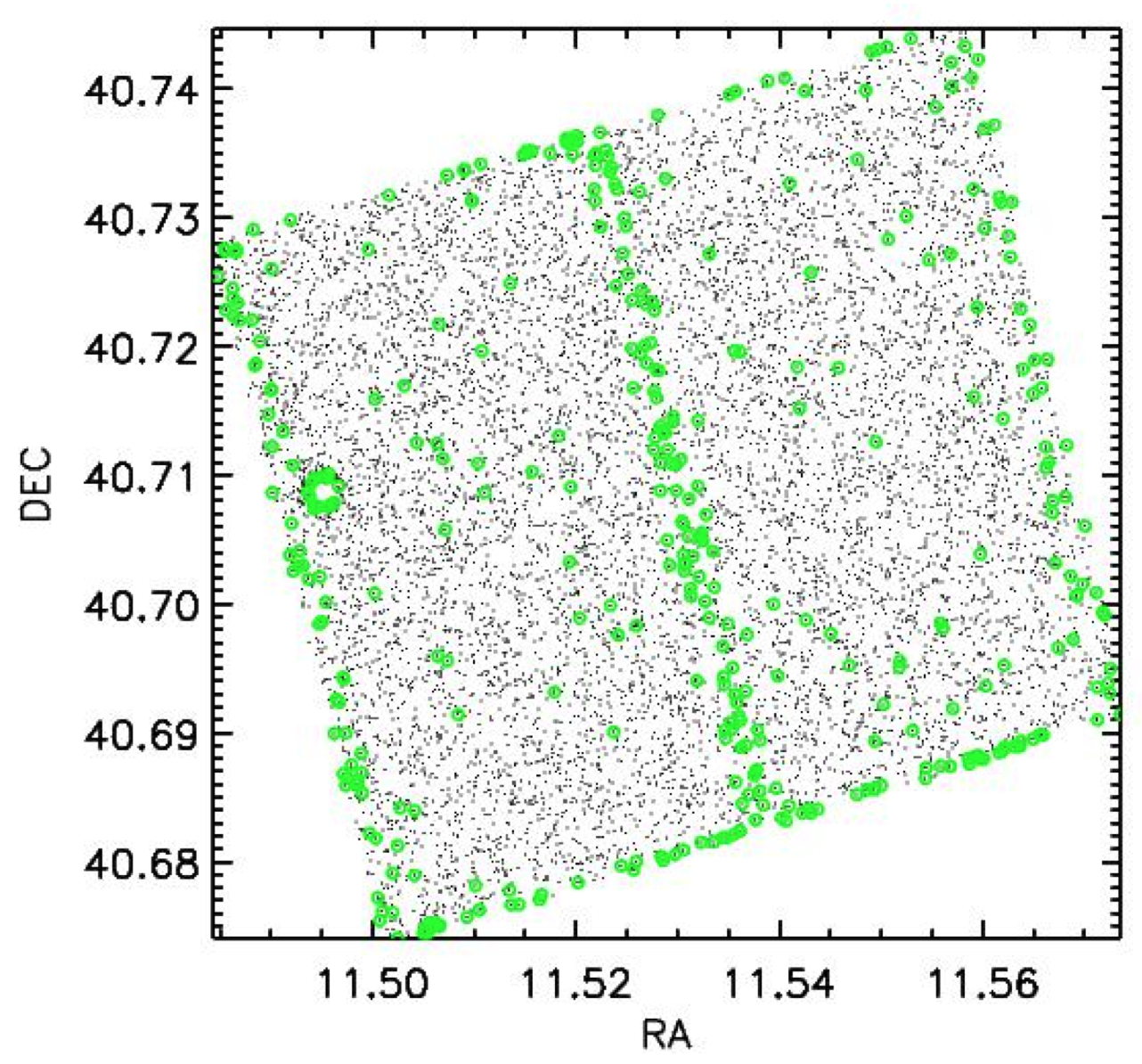

This figure is the byproduct of a project to find variable stars by searching for outliers in photometric light curves. It reveals that the photometry along the edges, and in the chip gap between detectors, is degraded in some images, with outliers that can be mistaken for potential variables (i.e., the green points). While this complicates the ability to find variables in these areas, the effect on mean photometric values is believed to be relatively small in most cases. However, we caution users to be aware of this potential issue by examining their results for potential edge effects.

The effect appears to be due to insufficient masking along the edges and gaps, but is still under investigation.

A more detailed analysis of the effect on photometry will be included in this section in the future.


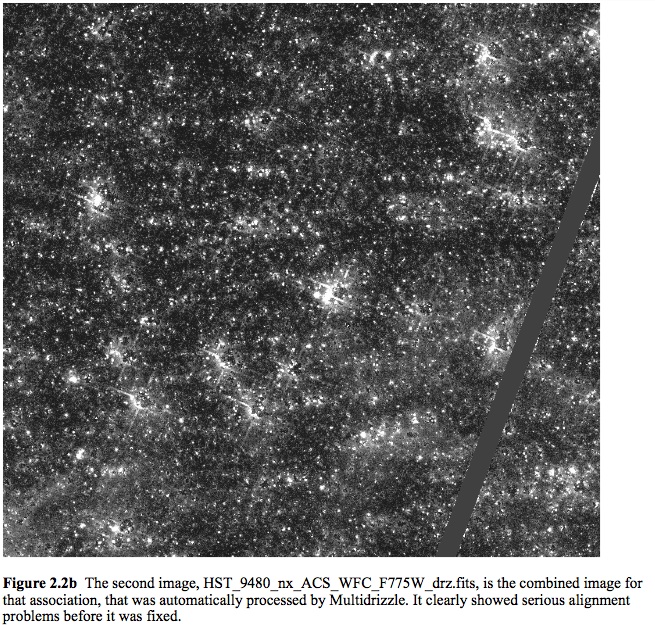

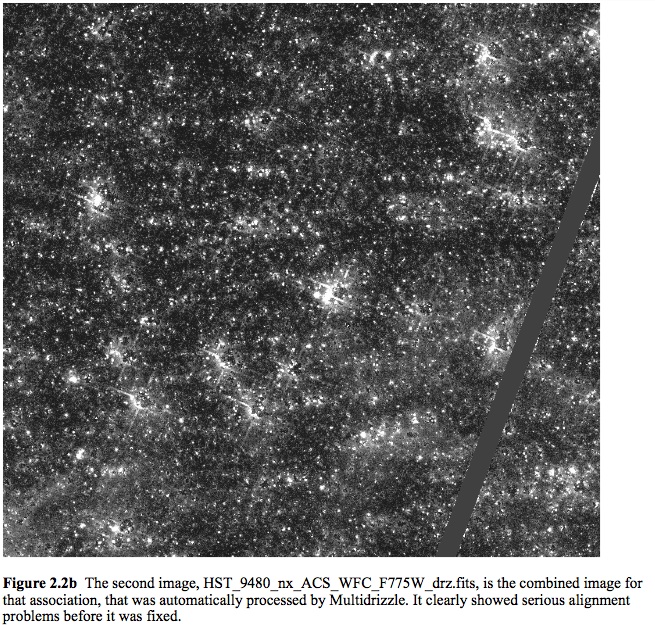

When combining images, the HLA assumes that exposures within a visit

are well aligned. This is generally a good assumption, but

occasionally is not true, especially when combining the observations

from one filter with another. This can result in "smeared" images, bad

photometry (because a position between the offset sources is

measured), and an abnormal Concentration Index.


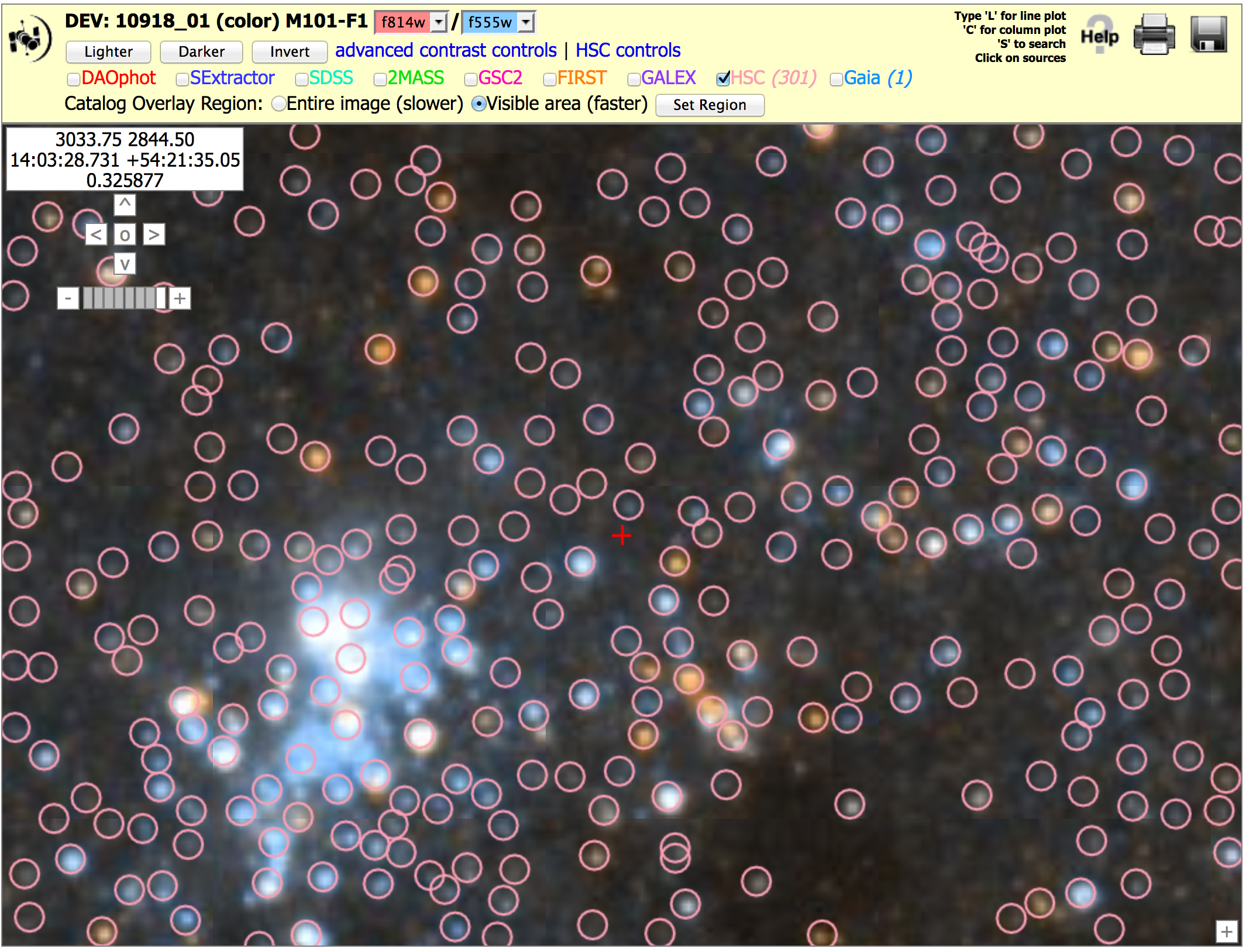

For example, this figure shows how


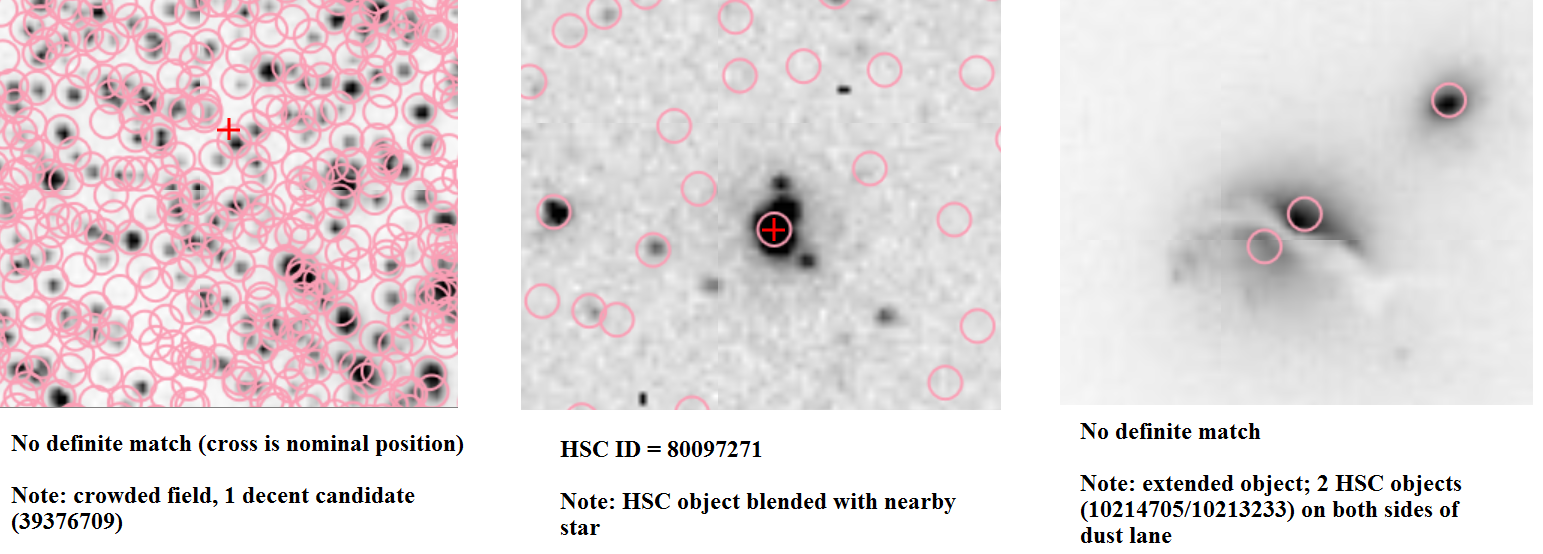

When doing the manual matching, sometimes there were issues with

the imaging data (e.g. crowded field, extended object) that

could impact the quality of the match between the HSC and the spectral data.

This information is included in the notes, as shown in these three examples.


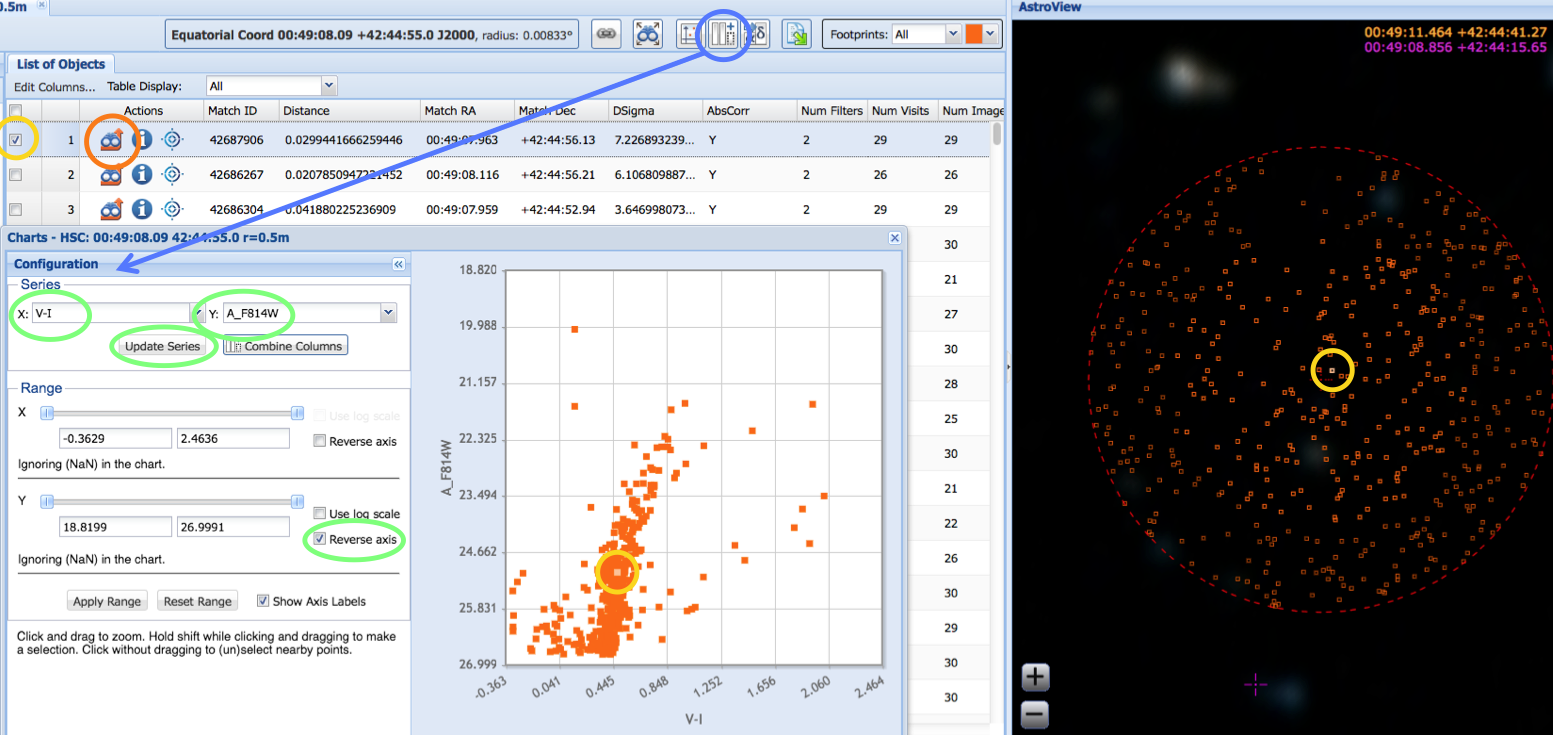

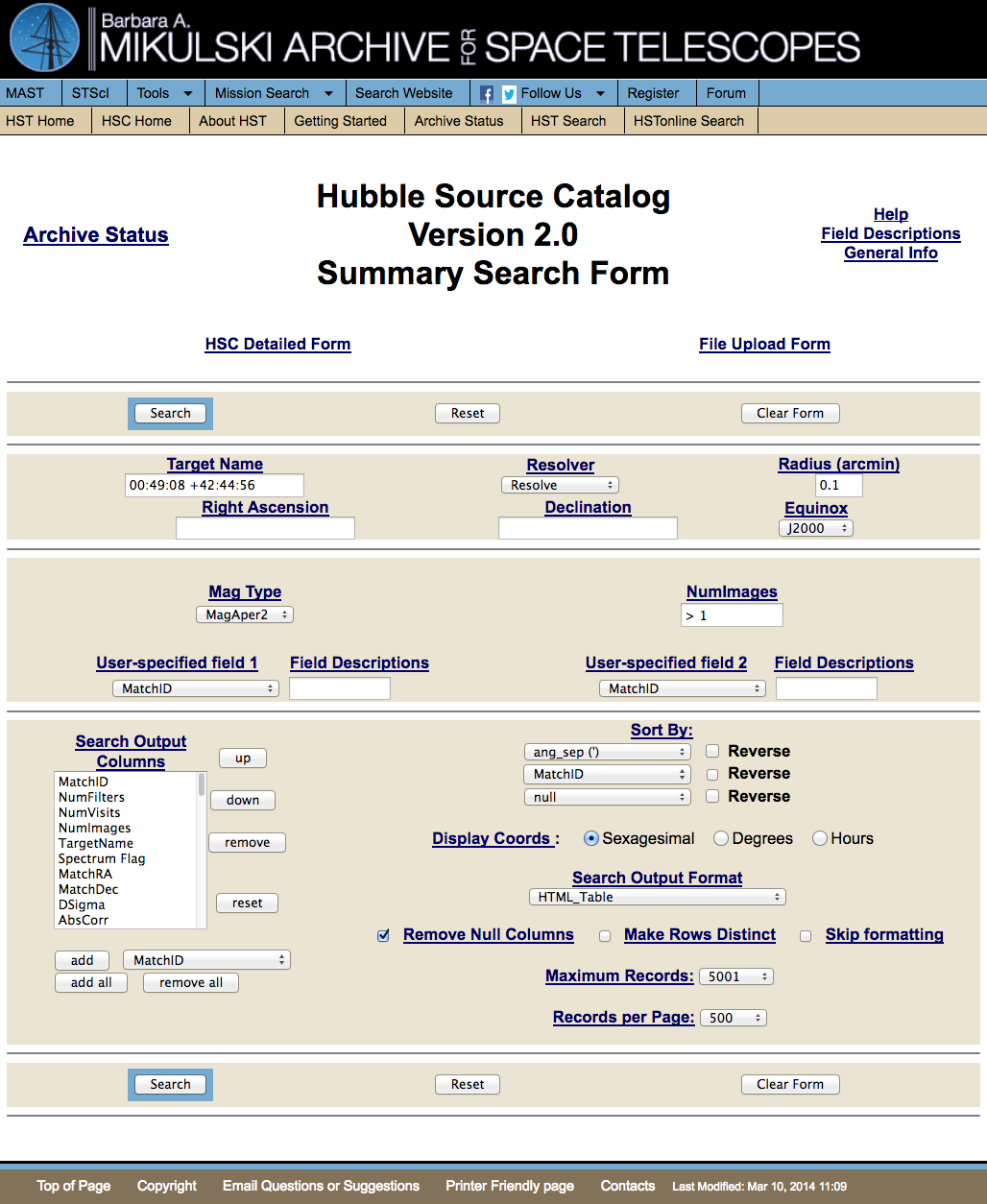

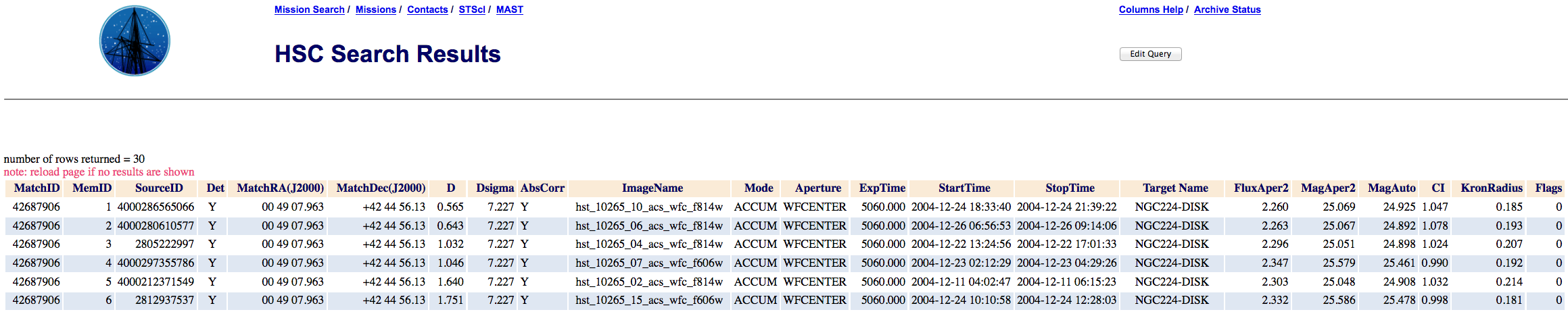

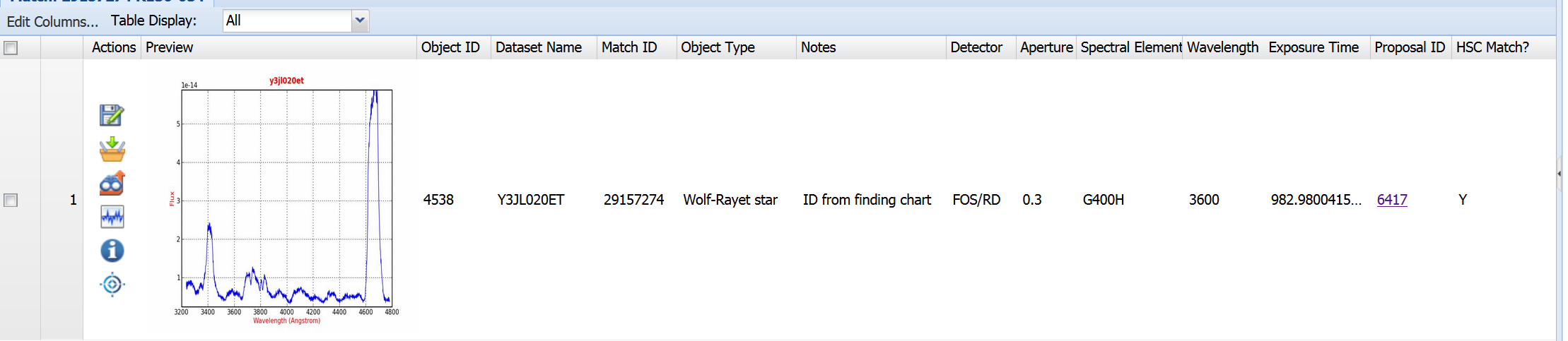

Here is an example of a match used in Use Case #8 (i.e., HSC MatchID=29157274)


icon in the MAST Discovery Portal you will get the HSC photometric summary page for the object. See

Note that this script assumes:

icon).

icon you mention in the use case? I don't see it on the screen.

icon under Actions (Load Detailed Results). This will show you the details for a particular MatchID, including cutouts for all the HST images that went into this match. If you then click on the icon under Action (Toggle Overlay Image) (

icon for the target of interest.

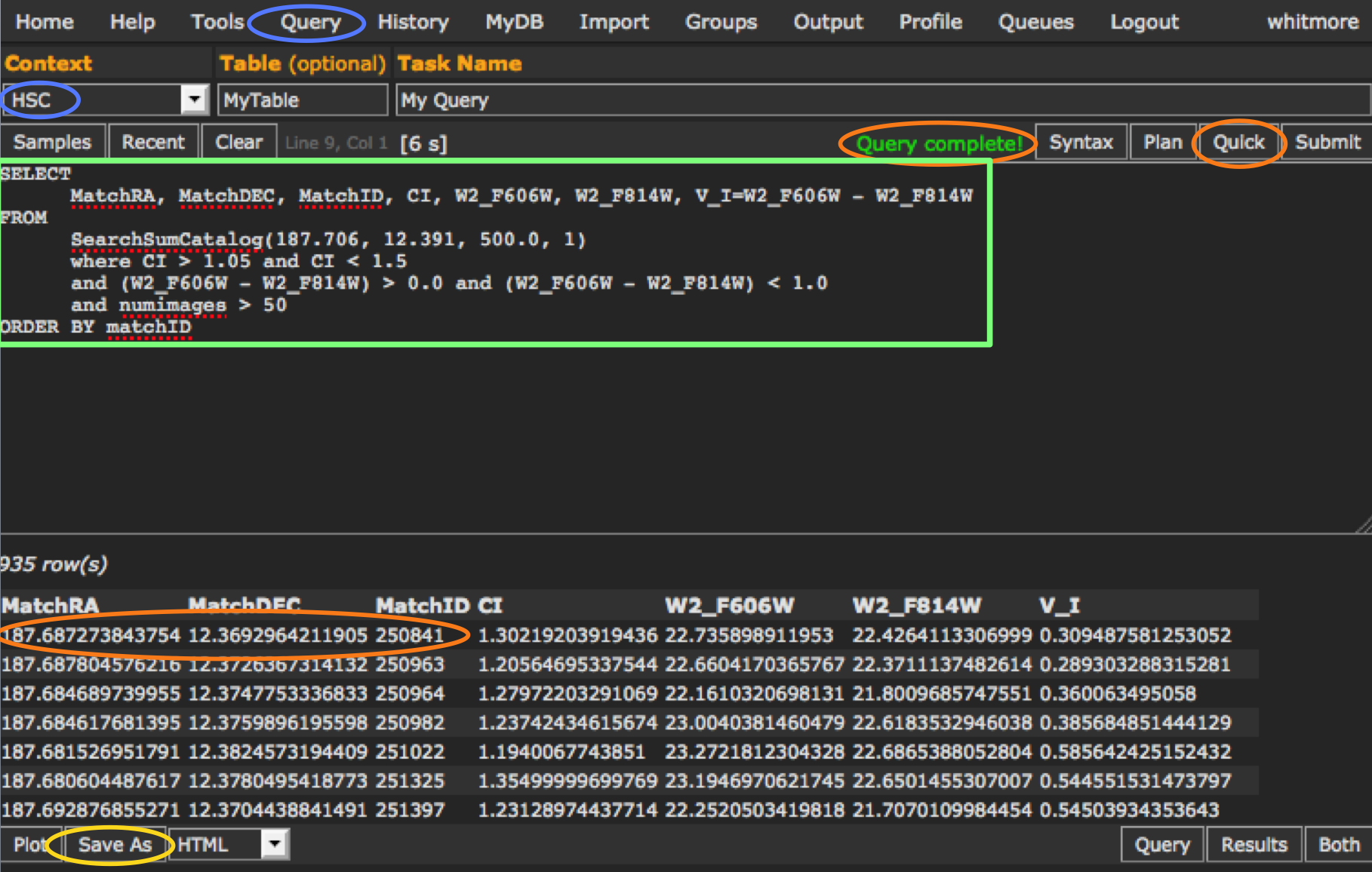

While HSC CasJobs does not have the limitation of only including a small subsample of the HSC (i.e., 50,000 objects), as is the case for the MAST Discovery Portal, it also does not have the wide variety of graphic tools available in the Discovery Portal. Hence the two systems are complementary.


